95% of enterprise AI pilots fail.

MIT’s estimate may be debated, but the reality is not.

Companies have poured $30-40 billion into projects and only a handful have delivered real returns. The models aren’t the issue: they work and they’re improving fast. The problem is the product. Pilots look great in a demo, but then collapse once they hit real workflows. Until AI earns trust, fits into routines and solves actual problems, it’s just an expensive prototype.

Adoption also remains painfully low. Only 9.7% of U.S. firms report using AI in production as of mid-2025, up from only 3.7% in 2023.

For product leaders, the hard truth is that the barrier isn’t technical, it’s product. Success won’t be judged by how many models you deploy or how sharp your benchmarks look. It’ll be judged by whether people use it, trust it and keep coming back.

Why pilots don’t scale

Individual adoption is surging: 40% of U.S. workers now use AI on the job, up from 20% two years ago. But inside enterprises, the story is very different.

More than 80% of organisations have piloted generative AI, nearly 40% claim deployment, yet only 5% have made it part of core workflows. That’s why billions in investment have produced so little return.

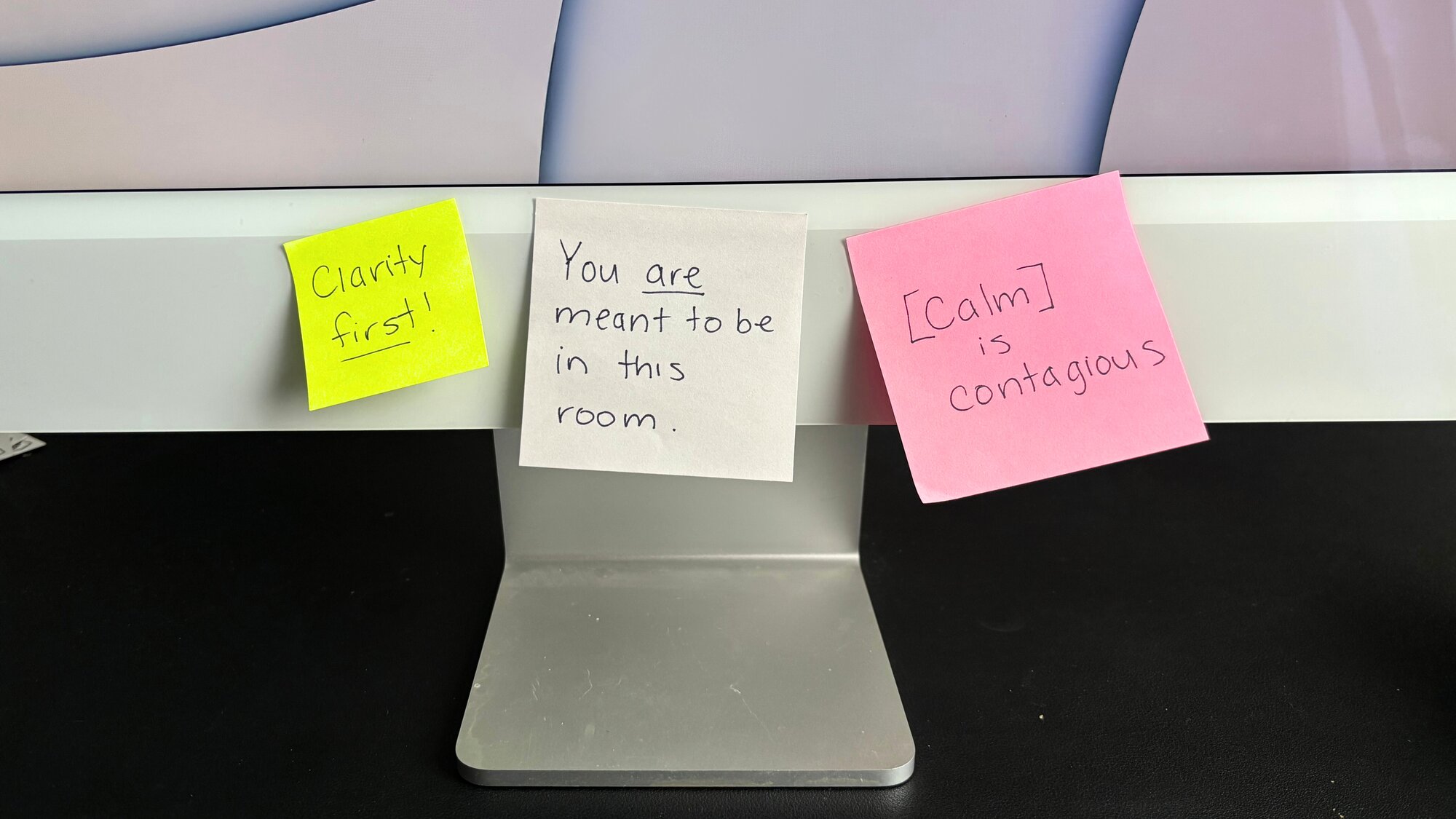

In contrast, consumer tools like ChatGPT and Copilot work because they fit naturally into daily habits. They’re intuitive, flexible, and require no training, allowing users to experiment, make mistakes, and quickly learn without consequence. Enterprise tools fail for the opposite reason: they don’t fit workflows. They feel disconnected, ignore permission structures and demand extra effort without delivering enough value. Once people feel they must double-check every output, what researchers call the verification tax, adoption drops fast.

Why AI products fail

At PwC, I helped build the Generative AI capability from the ground up, leading an adoption programme that reached tens of thousands of employees. What I learned fast is that adoption isn’t about launching tools, it’s about fluency. You need a baseline of literacy across the business, with pilots anchored to real, specific use cases. Otherwise, tools built around the wrong use cases go unused because people don’t understand how or when to apply them. Adoption happens when tools are part of how people actually work.

Across multiple enterprise projects, I’ve seen the same three breakdowns repeatedly derail progress: systems that don’t learn, resources chasing the wrong use cases and pilots launched without alignment to workflows.

1. The Learning Gap

Most enterprise AI is deployed in a fixed state, with little ability to adapt or incorporate feedback into future responses. As soon as a confidently delivered answer proves unreliable, trust drops and the verification tax kicks in: employees spend more time validating the system’s work than benefiting from it.

We experienced this with an audit-focused pilot at PwC. The model often retrieved the right keywords but lacked the context auditors needed. Early versions gave generic or outdated context, and a single confidently wrong answer was enough to dent users’ confidence. Adding abstentions, confidence levels and improved grounding helped rebuild trust.

For PMs: The answer is to design with humility. Make it clear when the system is unsure, allow it to say “I don’t know,” and ensure every correction feeds back into the model. That’s how the accuracy flywheel forms: mistakes turn into learning, and learning slowly rebuilds trust.
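The abstain-and-learn behaviour described above can be sketched in a few lines. This is a minimal illustration, not the system used in the pilot: the `Answer` type, the 0.7 threshold, and the `FeedbackLog` class are all hypothetical, and it assumes the model or retrieval layer already supplies a confidence score between 0 and 1.

```python
from dataclasses import dataclass, field

# Hypothetical cut-off; in practice it would be tuned per use case.
ABSTAIN_THRESHOLD = 0.7


@dataclass
class Answer:
    text: str
    confidence: float  # assumed to be a 0..1 score supplied upstream


@dataclass
class FeedbackLog:
    """Collects user corrections so they can feed later improvements."""
    corrections: list = field(default_factory=list)

    def record(self, question: str, wrong: str, corrected: str) -> None:
        self.corrections.append(
            {"question": question, "wrong": wrong, "corrected": corrected}
        )


def respond(answer: Answer, threshold: float = ABSTAIN_THRESHOLD) -> str:
    """Abstain below the threshold; otherwise show the answer with its confidence."""
    if answer.confidence < threshold:
        return "I don't know. Please check with a subject-matter expert."
    return f"{answer.text} (confidence: {answer.confidence:.0%})"
```

Showing the confidence next to the answer, and abstaining outright when it is low, gives users a reason to check selectively instead of checking everything. How the logged corrections are actually used (re-ranking, prompt updates, fine-tuning) is left open here.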

2. Misallocated Resources

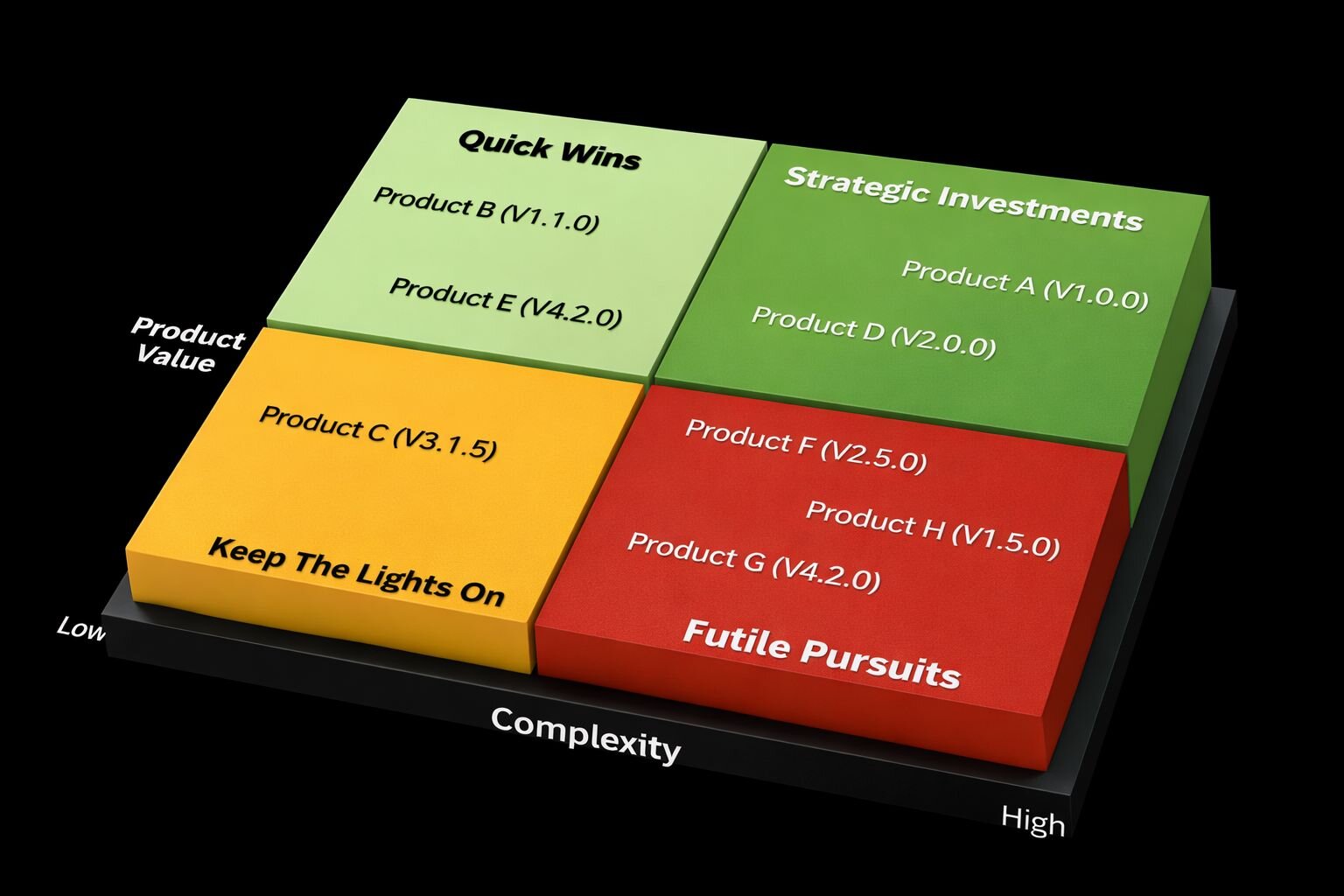

More than half of AI budgets go to sales and marketing pilots because they look good in a demo. However, the real ROI sits in the “boring”, unglamorous everyday workflows where adoption sticks.

Our team supported more than 200 exploratory pilots across finance, audit, HR and consulting. Many of the high-visibility projects demoed beautifully but struggled to progress because the value sat with only a handful of users or the business couldn’t mobilise behind them. In contrast, some of the least glamorous workflows, like a CV-generation tool in HR, delivered immediate, tangible ROI and quietly became some of the most-used prototypes internally.

For PMs: The lesson here is to resist the temptation to prioritise the flashiest use case. Real value usually sits in the quiet, essential workflows, the ones that may not demo well but solve daily pain points.

3. Lack of Alignment

Many pilots stalled not because of the model, but because no one could agree on who owned the workflow. We often had to navigate long chains of stakeholders, shifting points of contact, and use cases without enough detail about the problem or data available. Without alignment on permissions, roles and handoffs, pilots lost momentum. Once we standardised a simple workflow contract, adoption became far smoother because everyone understood how the tool fit into their day-to-day work.

For PMs: Write a simple workflow agreement that clarifies who uses the tool, what permissions are needed, and where the handoffs happen. Without that alignment, even the strongest pilots struggle to gain traction. With it, the system becomes almost invisible, in the best possible way, because it fits seamlessly into how people already work.
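One way to make such an agreement concrete is to capture it as structured data that can be checked before a pilot starts. The sketch below is an assumption about what a contract might contain, based only on the three questions above (who uses it, what permissions, where the handoffs are); the `WorkflowContract` class and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkflowContract:
    """Hypothetical record of who owns and uses a tool, and how work moves."""
    tool: str
    owner: str          # the person or team accountable for the workflow
    users: tuple        # roles that use the tool day to day
    permissions: tuple  # access the tool needs in existing systems
    handoffs: tuple     # points where work passes between people and the tool

    def gaps(self) -> list:
        """Return the names of sections that are still empty."""
        sections = {
            "owner": self.owner.strip(),
            "users": self.users,
            "permissions": self.permissions,
            "handoffs": self.handoffs,
        }
        return [name for name, value in sections.items() if not value]


# Illustrative values only; the owner and handoffs are not yet agreed.
draft = WorkflowContract(
    tool="cv-generator",
    owner="",
    users=("recruiter",),
    permissions=("read:candidate_profiles",),
    handoffs=(),
)
print(draft.gaps())
```

A pilot whose contract still reports gaps is a signal that the ownership conversation has not finished, whatever the state of the model.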

Hidden Blockers

Even when you avoid the obvious pitfalls, two hidden blockers often undermine adoption: the verification tax and data architecture.

1. Verification Tax

You’ve seen this before: a system gives a confident answer that turns out to be wrong. Trust starts to slip, and people compensate by double-checking every output, often spending more time validating the tool than the tool ever saves them. The only way through is to build transparency into the experience: let the system show uncertainty, let it abstain when it doesn’t know, and make it clear how user corrections improve future responses. When people can see the system learning, trust has room to grow rather than erode.

2. Data Architecture

Many pilots rely on copying data into new environments, which is fine for a demo but quickly falls apart at scale. Permissions break, workflows slow down and security concerns multiply. A more sustainable approach is to keep data where it already lives and bring the AI to it. This zero-copy pattern preserves governance, maintains existing access controls, and allows the tool to sit naturally inside real workflows instead of working around them.
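As a rough sketch of the zero-copy idea, the AI layer below never holds its own copy of the documents. It queries the system of record at request time and passes along the caller’s roles, so the existing access controls decide what the model can see. `SourceSystem`, `Document`, and `build_context` are hypothetical stand-ins, and the in-memory list only simulates a real store.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_roles: frozenset  # access control stays attached to the source data


class SourceSystem:
    """Stand-in for the system of record, which enforces its own permissions."""

    def __init__(self, docs):
        self._docs = list(docs)

    def search(self, term: str, user_roles) -> list:
        roles = set(user_roles)
        return [
            d for d in self._docs
            if term.lower() in d.text.lower() and d.allowed_roles & roles
        ]


def build_context(source: SourceSystem, term: str, user_roles) -> list:
    """Fetch context in place, per request, using the caller's own roles."""
    return [d.text for d in source.search(term, user_roles)]


# Illustrative data only.
source = SourceSystem([
    Document("d1", "Audit plan for FY25", frozenset({"auditor"})),
    Document("d2", "Audit fee negotiation notes", frozenset({"partner"})),
])
print(build_context(source, "audit", ["auditor"]))
```

Because nothing is copied into a separate environment, a change to a document’s permissions in the source takes effect on the next request, with no second set of access rules to keep in sync.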

The Buy vs Build Reality

It’s tempting to build AI tools in-house. After all, no one knows your business better than you. But data shows that internal builds reach production only about a third of the time, while projects with external partners succeed twice as often.

For PMs: The goal isn’t to outsource everything, but to strike the right balance. Internal teams are best placed to define the workflows, understand the business needs, and shape the real problems worth solving. External partners bring the practical experience of scaling: everything from integrations to compliance to hardened processes. It helps to hold vendors to a clear standard: they should align with your access controls, avoid keeping hidden data, surface uncertainty and offer audit logs out of the box. When those pieces are missing, it’s usually a sign the solution isn’t ready to scale.

The Pilot-to-Product Playbook

Accuracy in the lab doesn’t matter if nobody uses the product in the real world. Most AI pilots fail because they’re treated like science projects. Product leaders need a clear path from prototype to production.

Conclusion

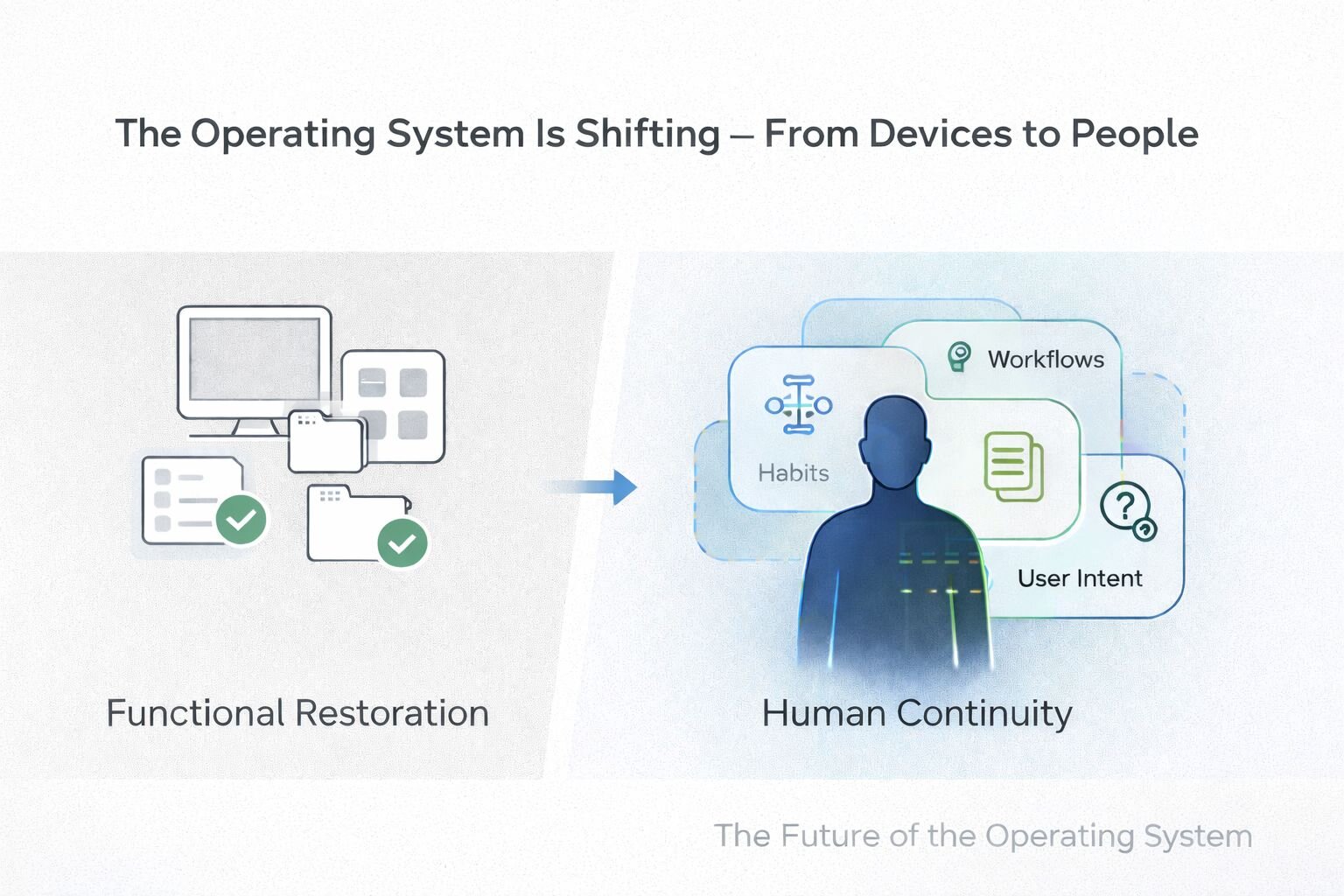

Ultimately, the success of enterprise AI won’t be measured by how many models you deploy, but by whether people actually use the product, trust it, and return to it in the flow of their daily work. Most failures trace back to the same roots: a lack of trust, weak fit with existing workflows or too much friction in the experience.

The real work of the product leader is to reverse those patterns by designing for trust, building tools that fit naturally into daily work and proving adoption with every release. When you get it right, you join the small group of organisations that manage to scale. Otherwise, even the most advanced system ends up as an expensive prototype.

Sources: MIT Sloan Management Review, Anthropic’s Economic Index, Fortune, Pew Research, MLQ.ai