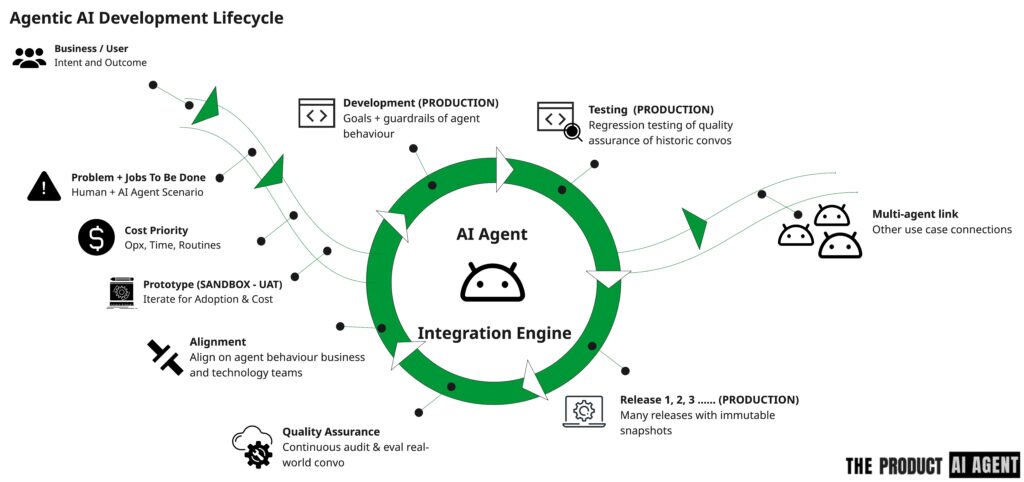

AI agents are gaining traction in product teams, but many fail to deliver beyond a proof-of-concept. Why? Because most teams are still using outdated methods. In this article, you'll learn the five core skills that, in my experience, product managers need to develop to deliver scalable, explainable AI agents that can operate in real-world environments.

Why most product managers fail at agentic AI

It was a Wednesday evening, and my friend Lizzie popped up on WhatsApp. She had sent through a fast and flustered voice note:

"We've got the green light on our first agent. It's exciting, but… no one knows what we're building."

I've worked with Lizzie on and off for years. She's always been the driver on direction, but this time she was working with something that changed everything: software that thinks for itself. We call them AI agents, of course.

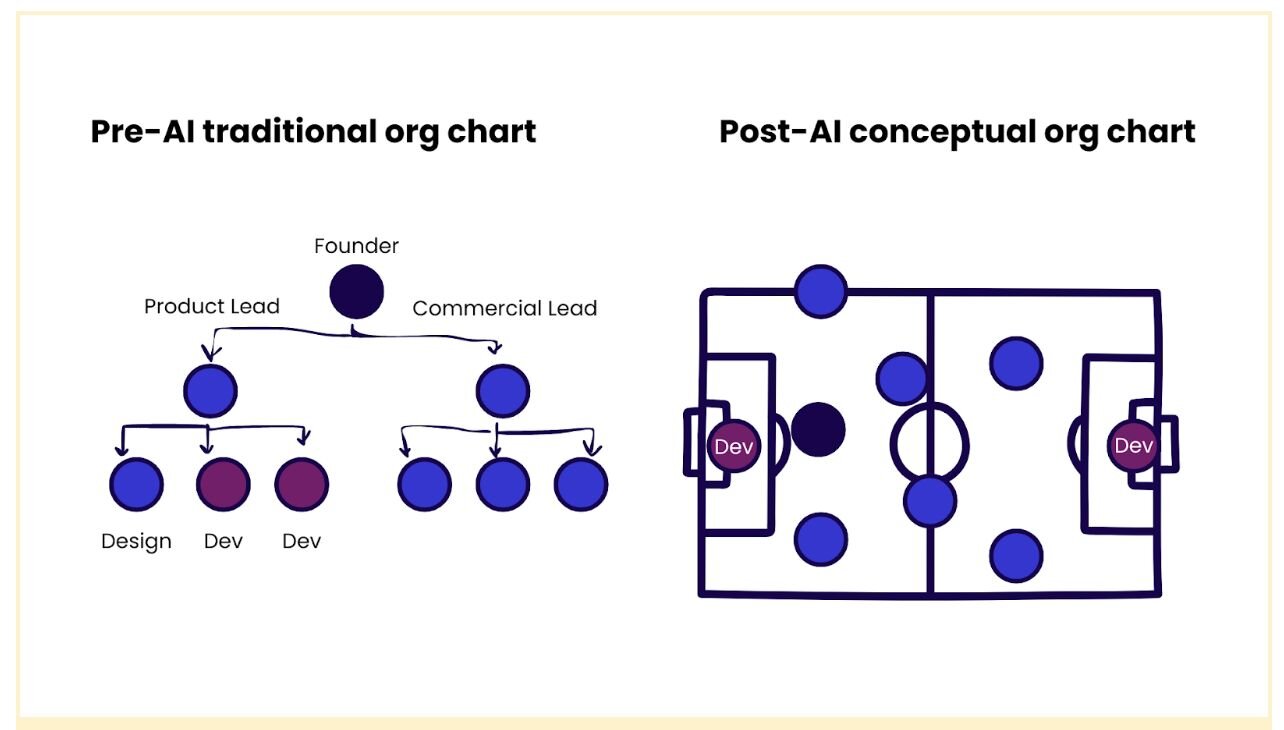

Lizzie's team had previously worked on chatbots for marketing, so when leadership asked them to explore agentic AI, they assumed it would be more of the same: some prompts, some automation logic, some flow diagrams.

But quickly, everything stalled. The designer didn't know what to sketch. The data team didn't know what to prepare. The engineers wanted to see a prototype. This is why Lizzie had reached out. The software development lifecycle had let her down.

Why does most agentic AI fail before it ships?

When we started to unpack the real problem (delivering on time, not disappointing executives), I asked Lizzie a question drawn from my own failings with agentic AI:

"Have you figured out what knowledge your agent needs to make decisions?"

There was a long pause. This is where most agentic projects collapse, not because of the AI model but because product managers treat agents like traditional software features. But agents don't live in workflows. They live in systems. They need a new kind of software classification.

We need to stop thinking about features and start thinking about decisions. Agentic AI is not UI-first; it's cognition-first.

When you get this wrong, the symptoms are apparent. Teams build beautiful prototypes that fall apart at scale. Executive sponsors lose confidence. And product leaders burn weeks trying to retrofit structure onto what was, at best, a glorified prompt.

I've seen this play out in both startups and global enterprises. The good news? It's fixable.

Here’s how I helped Lizzie reframe her role as a product manager dealing with agentic AI:

1. Treat knowledge as infrastructure

In most companies, expert knowledge is dispersed across various channels, including slide decks, process documents, email chains, and informal conversations over coffee. For an agent to function, tribal knowledge needs to become structured, retrievable, and usable in real-time.

When we built agents for a B2B contract use case, we didn't start with prompts. We began with workshops to extract the judgments and steps sales managers took daily. This wasn't glamorous work; it was diagramming the decisions someone made in their head.

We used techniques from information architecture, decision trees, and systems thinking. One team member said it felt more like redesigning a brain than designing software, which is precisely how you should approach designing agentic systems.

This approach helped everyone, from engineering to compliance, understand the agent's logic, test its limits, and iterate responsibly.
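To make the idea concrete, here is a minimal sketch of what a diagrammed decision might look like once it is captured as data. It is not the structure we used on the project; the `DecisionNode` class, the `decide` function, and the renewal questions and thresholds are all hypothetical, chosen only to illustrate the technique.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class DecisionNode:
    """One judgment an expert makes, captured as a yes/no question."""
    question: str
    test: Callable[[dict], bool]          # how to answer the question from known facts
    if_yes: Union["DecisionNode", str]    # next question, or a final outcome
    if_no: Union["DecisionNode", str]


def decide(node, facts):
    """Walk the tree and return the outcome plus the trail of answers."""
    trail = []
    while isinstance(node, DecisionNode):
        answer = node.test(facts)
        trail.append((node.question, answer))
        node = node.if_yes if answer else node.if_no
    return node, trail


# Hypothetical renewal logic, as it might come out of a workshop.
RENEWAL_TREE = DecisionNode(
    question="Is the contract value above 50,000?",
    test=lambda f: f["contract_value"] > 50_000,
    if_yes=DecisionNode(
        question="Has the customer raised a complaint this year?",
        test=lambda f: f["complaints_this_year"] > 0,
        if_yes="escalate to sales manager",
        if_no="offer standard renewal",
    ),
    if_no="auto-renew",
)

outcome, trail = decide(
    RENEWAL_TREE, {"contract_value": 80_000, "complaints_this_year": 1}
)
print(outcome)
for question, answer in trail:
    print(f"  {question} -> {'yes' if answer else 'no'}")
```

The trail matters as much as the outcome: it is what lets engineering, compliance, and the original experts read the agent's logic and challenge it.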

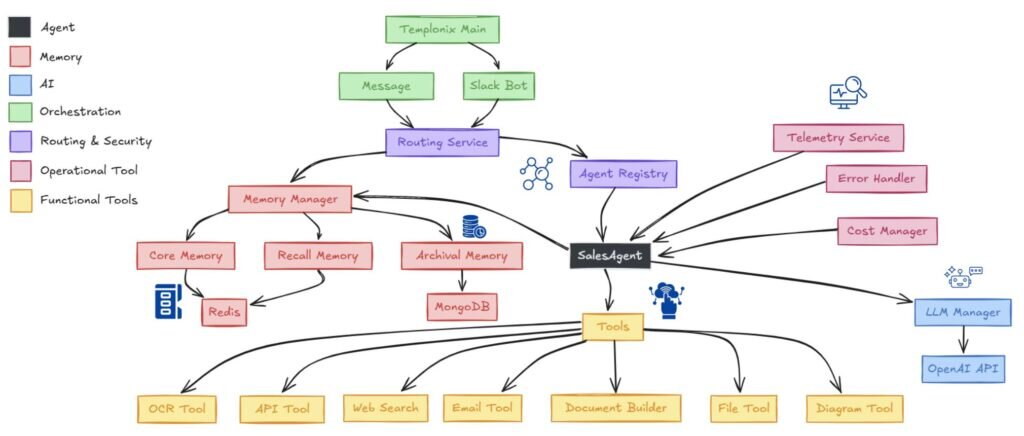

2. Use Jobs to Be Done to design memory

Many people believe the LLM is the brain of an agentic system. It isn't. It's just the voice. The real intelligence comes from memory, the context the agent carries from one interaction to the next. But memory must be designed around the specific role and context of the user the agent serves.

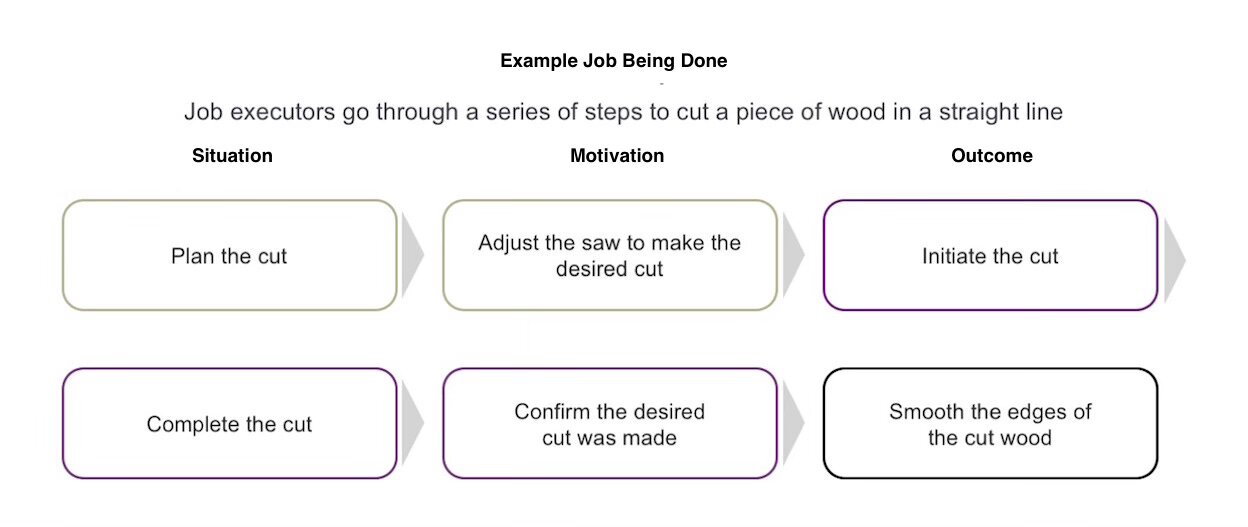

This is where the Jobs to Be Done (JTBD) framework saved us. Instead of thinking about features, we asked:

- What situation is the user in?

- What goal are they trying to achieve?

- What does success look like?

That gave us three requirements for the memory function of the agentic system:

- Core memory (the current job)

- Recall memory (what just happened)

- Archival memory (what has worked well before)

Once we had established this structure, we could build memory components that supported the agent's decision-making, rather than just retaining data for its own sake.
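A rough sketch of how those three tiers might sit together is shown below. The `AgentMemory` class, its field names, and the five-event recall window are assumptions made for illustration, not a description of what we shipped or of any particular framework.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class AgentMemory:
    # Core memory: the current job, framed with the three JTBD questions.
    job: dict
    # Recall memory: only the last few events (window size is an assumption).
    recall: deque = field(default_factory=lambda: deque(maxlen=5))
    # Archival memory: approaches that have worked well before.
    archive: list = field(default_factory=list)

    def record(self, event: str) -> None:
        """Remember what just happened; the oldest event drops off when full."""
        self.recall.append(event)

    def archive_success(self, situation: str, approach: str) -> None:
        """Store an approach that worked, keyed by the situation it worked in."""
        self.archive.append({"situation": situation, "approach": approach})

    def context(self) -> dict:
        """Assemble only the memory that is relevant to the current job."""
        situation = self.job["situation"]
        worked = [a["approach"] for a in self.archive if a["situation"] == situation]
        return {"job": self.job, "recent": list(self.recall), "worked_before": worked}


memory = AgentMemory(job={
    "situation": "contract renewal",
    "goal": "renew without discounting",
    "success": "signed renewal at current price",
})
for i in range(7):
    memory.record(f"step {i}")
memory.archive_success("contract renewal", "lead with usage data")
memory.archive_success("new lead", "send intro deck")

ctx = memory.context()
print(ctx["recent"])
print(ctx["worked_before"])
```

Notice that `context()` filters the archive by the current situation. That is the point of designing memory around the job: the agent carries what helps the decision in front of it, not everything it has ever seen.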

3. Model cost from day zero

Here's the part most product teams aren't ready for: agents cost money every time they think. I learned this when an early prototype burned through its token limit after just a few hours. We hadn't anticipated how often the agent would hit external APIs or how long the prompt chains would be. The tech worked, but the economics didn't.

So we started building in cost modelling, just like you'd model out infrastructure or cloud costs:

- How many tokens per task?

- How many prompts per job?

- What's the latency on each API?

- What's the cost per successful outcome?
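Those four questions fit into a very small model. The sketch below is one way to wire them together; the `TaskCostModel` class and every number in the example are made-up placeholders, so substitute your own provider pricing and measured success rate.

```python
from dataclasses import dataclass


@dataclass
class TaskCostModel:
    tokens_per_prompt: int
    prompts_per_job: int
    price_per_1k_tokens: float   # model pricing (placeholder value below)
    api_calls_per_job: int
    cost_per_api_call: float
    api_latency_seconds: float   # average latency of one external call
    success_rate: float          # share of jobs that end in a usable outcome

    def cost_per_job(self) -> float:
        tokens = self.tokens_per_prompt * self.prompts_per_job
        model_cost = tokens / 1000 * self.price_per_1k_tokens
        api_cost = self.api_calls_per_job * self.cost_per_api_call
        return model_cost + api_cost

    def cost_per_successful_outcome(self) -> float:
        # Failed jobs still cost money, so spread their cost over the successes.
        return self.cost_per_job() / self.success_rate

    def api_latency_per_job(self) -> float:
        # Assumes the external calls run one after another.
        return self.api_calls_per_job * self.api_latency_seconds


model = TaskCostModel(
    tokens_per_prompt=2000,
    prompts_per_job=6,
    price_per_1k_tokens=0.01,
    api_calls_per_job=4,
    cost_per_api_call=0.005,
    api_latency_seconds=0.8,
    success_rate=0.7,
)
print(f"Cost per job: {model.cost_per_job():.2f}")
print(f"Cost per successful outcome: {model.cost_per_successful_outcome():.2f}")
print(f"API latency per job: {model.api_latency_per_job():.1f}s")
```

The number to watch is the last cost line. A cheap job with a low success rate can end up more expensive per outcome than a pricier job that usually gets it right.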

We even ran a lightweight Net Present Value (NPV) analysis to justify the effort to leadership. Without that, we would have launched a proof-of-concept that failed at scale.
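For readers who haven't run one, NPV discounts each period's net cash flow back to today and sums the results. A minimal version, with an entirely hypothetical build cost, savings figures, and discount rate, might look like this:

```python
def net_present_value(rate, cash_flows):
    """Discount each cash flow to today; cash_flows[0] is the upfront amount."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


# Hypothetical figures: build cost in year 0, then net savings after run costs.
cash_flows = [-100_000, 40_000, 50_000, 60_000]
npv = net_present_value(0.10, cash_flows)
print(f"NPV at a 10% discount rate: {npv:,.0f}")
```

A positive result means the agent is expected to return more than it costs at that discount rate. The yearly savings should already be net of the running costs from the per-outcome model, otherwise the analysis flatters the agent.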

More than once, this analysis helped us say no to flashy but unsustainable use cases. It's better to redirect effort early than sink engineering cycles into systems no one can afford to run.

4. Build governance into the design

One of the biggest mistakes I see is teams bolting on compliance after the agent is already in production. But if your agent can make decisions, trigger actions, or access data, governance isn't just a legal requirement; it's a design input.

For a healthcare client, we defined policies before creating the prompts:

- Who could use the agent?

- What data was allowed?

- When should the agent hand off to a human?

These questions shaped the prompts, not the other way around.

We separated policy (the rule) from enforcement (the logic). That way, legal, IT, and product could all understand what the agent was allowed to do—and update it safely.
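One way to picture that separation is a policy that is nothing but data, and a single function that enforces it. The sketch below is an assumption about how this could look, not the client's implementation; the roles, data categories, and hand-off topics are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentPolicy:
    """The rule: plain data that legal, IT, and product can all read."""
    allowed_roles: frozenset    # who could use the agent
    allowed_data: frozenset     # what data was allowed
    handoff_topics: frozenset   # when to hand off to a human


def enforce(policy, role, requested_data, topic):
    """The logic: applies whatever policy it is given, and knows no rules itself."""
    if role not in policy.allowed_roles:
        return "deny: role not permitted"
    blocked = set(requested_data) - policy.allowed_data
    if blocked:
        return "deny: data not permitted: " + ", ".join(sorted(blocked))
    if topic in policy.handoff_topics:
        return "handoff: route to a human"
    return "allow"


# Hypothetical policy values for illustration only.
policy = AgentPolicy(
    allowed_roles=frozenset({"nurse", "clinician"}),
    allowed_data=frozenset({"appointment_history", "care_plan"}),
    handoff_topics=frozenset({"medication_change"}),
)

print(enforce(policy, "nurse", ["care_plan"], "scheduling"))
print(enforce(policy, "nurse", ["care_plan"], "medication_change"))
print(enforce(policy, "receptionist", ["care_plan"], "scheduling"))
```

Changing what the agent is allowed to do means editing the policy values, not the enforcement code, which is what makes updates safe to review.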

Good governance isn't a blocker; it's the playbook that helps your agent scale with confidence. It makes stakeholders say yes, not maybe.

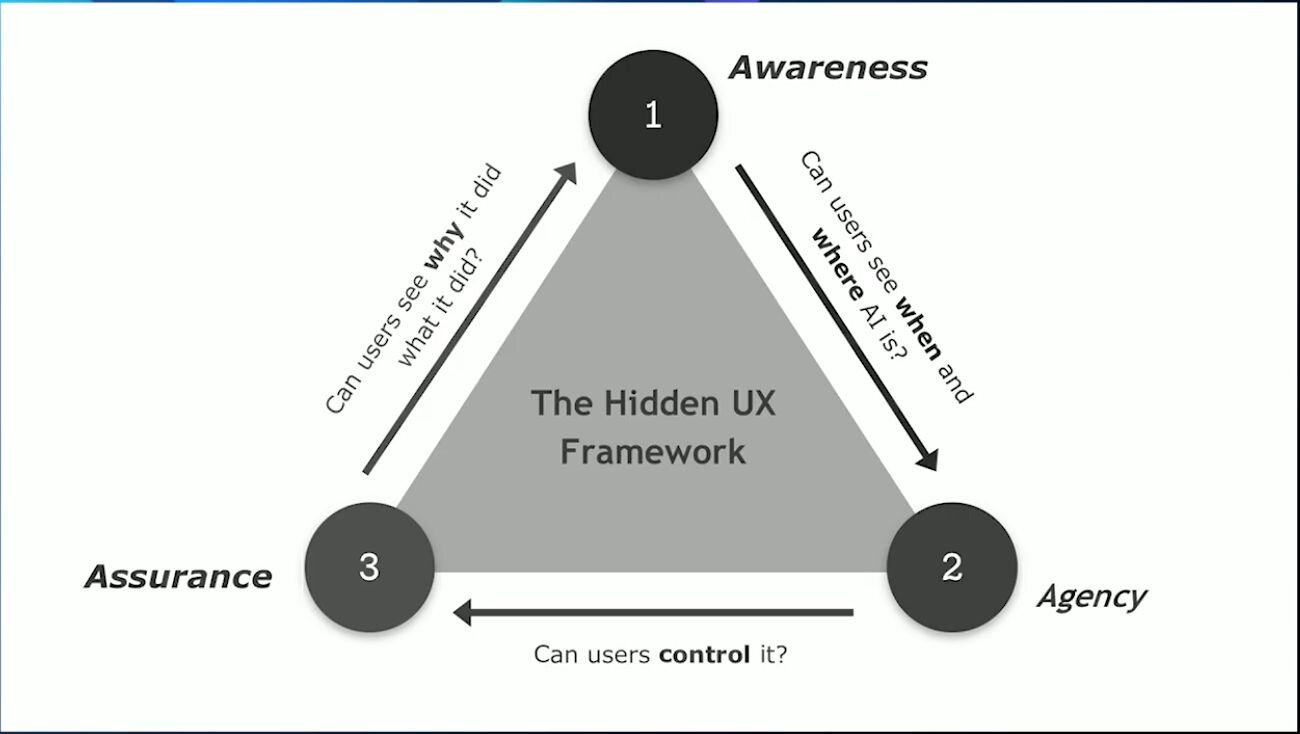

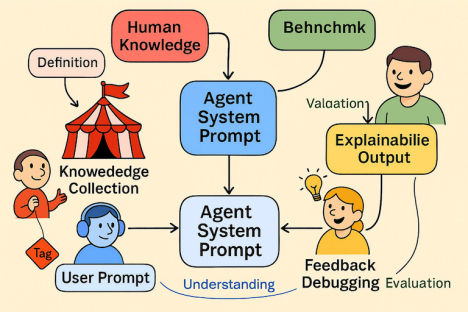

5. Make explainability part of your acceptance criteria

People won't trust what they can't understand. In our B2B contract project, we saw this firsthand.

The agent was trained to screen contracts for renewal updates. It worked, but stakeholders continued to ask, "Why did it change these terms and conditions?"

So, we introduced graph-backed reasoning and confidence scores. The agent didn't just say "yes" or "no". It said:

"Contract used CRM contract data and new law changes 2.1 based on the legal team's recent lawsuit. Confidence: 92%."

That one feature, explainability, unlocked adoption. Suddenly, legal, ops and leadership trusted the system.

We also added manual override logic. When the agent's confidence dropped, it handed the task over to a human, with an explanation of what it had tried. That little signal transformed how teams perceived risk.
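The override rule itself can be very small. Here is a sketch under stated assumptions: the `AgentDecision` shape, the `route` function, and the 80% threshold are all hypothetical, and the graph-backed reasoning that produces the sources and the score is out of scope here.

```python
from dataclasses import dataclass, field

CONFIDENCE_THRESHOLD = 0.80  # assumed cut-off; tune it per use case


@dataclass
class AgentDecision:
    answer: str
    confidence: float                 # 0.0 to 1.0
    sources: list                     # evidence the agent relied on
    steps_tried: list = field(default_factory=list)


def route(decision, threshold=CONFIDENCE_THRESHOLD):
    """Return who handles the result, always with an explanation attached."""
    if decision.confidence >= threshold:
        evidence = ", ".join(decision.sources)
        message = (
            f"{decision.answer} Based on: {evidence}. "
            f"Confidence: {decision.confidence:.0%}."
        )
        return {"handled_by": "agent", "message": message}
    tried = "; ".join(decision.steps_tried)
    message = (
        f"Handing over to a human. Tried: {tried}. "
        f"Confidence: {decision.confidence:.0%}."
    )
    return {"handled_by": "human", "message": message}


confident = AgentDecision(
    answer="Updated the renewal terms.",
    confidence=0.92,
    sources=["CRM contract data", "recent law changes"],
)
unsure = AgentDecision(
    answer="Unclear which clause applies.",
    confidence=0.55,
    sources=["CRM contract data"],
    steps_tried=["matched contract in CRM", "searched recent law changes"],
)
print(route(confident)["message"])
print(route(unsure)["message"])
```

Either way, the reader gets a reason. The confident path cites its evidence and the hand-off path says what was attempted, so nobody has to guess why the agent did what it did.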

What this means for product managers

With agentic AI, we're no longer building features; we're building intelligent systems.

And that means we need to:

1. Codify expert logic, not just workflows.

2. Architect memory around jobs, not screens.

3. Model costs like an operating system.

4. Treat governance as architecture.

5. Bake explainability into every release.

Agentic AI changes the craft of product management. It encourages us to think in systems terms, design effective decisions, and apply economic principles. And that's precisely where we need to be if we want AI to work outside the lab and inside the enterprise.

If I've learned one thing, it's this: you don't ship prompts; you ship trust. Trust is built on knowledge, memory, cost, policy, and transparency. These are the new foundations. It's time we mastered them.