Agentic AI is quickly becoming the industry's new buzzword. You have probably already come across examples: tools that book meetings, file expense reports, and even troubleshoot technical issues. If you manage AI products, you have probably been asked at some point, 'Can we build an agent for this?'

The better question is, should you?

Not every task benefits from the complexity of an agentic system. Knowing when to say yes and when to hold the line is quickly becoming one of the most important product decisions you will make in AI-native product development.

In this article, I will explain what makes agentic AI different, when to use it, and how product managers can thoughtfully navigate this new, high-stakes terrain. Along the way, I will share a few stories I have come across through other product managers and in my own work.

What Is Agentic AI, Really?

Most people know generative AI through chatbots like ChatGPT and Gemini that answer questions, summarizers that condense long texts, and image generators that create visuals on demand. These systems are powerful, but they are largely limited to generating content in response to prompts.

Agentic AI goes a step further. It not only generates content but also executes a whole workflow, iterating on its approach and making decisions along the way. It is a system that can:

- Plan multi-step actions

- Make decisions dynamically based on context

- Use tools and APIs to act on the world

- Maintain memory and adapt its plan as it moves toward a goal

Imagine an agentic AI travel planner that is asked to find a budget-friendly beach vacation in July. The agent starts a dynamic workflow. It uses a tool (a weather API) to rule out destinations like the Caribbean and then checks live flight prices to the remaining options. Suppose it finds a great flight to the Algarve, Portugal. It then queries a hotel API and decides that local accommodation costs are too high, violating the "budget-friendly" constraint. Using its memory of all prior checks, it pivots to the next-best destination, the Canary Islands. It continues this iterative process, checking availability, re-evaluating the budget, and taking practical action, until it reaches the goal: a complete, bookable plan. Once a viable plan is formed, the agent doesn't just display it. It uses an API to share the proposed plan directly with the user's family members.
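The loop in the travel example can be sketched in a few lines of Python. This is only an illustration of the plan, check, remember, and pivot pattern, not a real agent: the three dictionaries are hypothetical stand-ins for the weather, flight, and hotel APIs, and the prices and the `plan_trip` and `Memory` names are invented for the example.

```python
from dataclasses import dataclass, field

# Hypothetical stand-ins for real weather, flight, and hotel APIs.
WEATHER_OK = {"Caribbean": False, "Algarve": True, "Canary Islands": True}
FLIGHT_PRICE = {"Algarve": 180, "Canary Islands": 220}
HOTEL_PRICE = {"Algarve": 1400, "Canary Islands": 700}


@dataclass
class Memory:
    """Records why each option was rejected so later steps can build on it."""
    rejected: dict = field(default_factory=dict)


def plan_trip(candidates, budget):
    """Try destinations in order of preference and pivot when one fails a check."""
    memory = Memory()
    for destination in candidates:
        # Tool call 1: weather check.
        if not WEATHER_OK[destination]:
            memory.rejected[destination] = "poor July weather"
            continue
        # Tool calls 2 and 3: flight and hotel prices.
        total = FLIGHT_PRICE[destination] + HOTEL_PRICE[destination]
        # Decision made from context: does the trip still fit the budget?
        if total > budget:
            memory.rejected[destination] = f"total {total} exceeds budget {budget}"
            continue  # pivot to the next-best destination
        return destination, memory
    return None, memory  # no viable plan; the caller decides what happens next
```

Calling `plan_trip(["Caribbean", "Algarve", "Canary Islands"], budget=1000)` with these made-up numbers settles on the Canary Islands and leaves a record of why the other two were dropped. A production agent would let a model choose the next step rather than follow a fixed order, which is exactly where the extra risk comes from.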

For product managers, this changes the approach. Designing for generative AI often centers on prompt quality, data grounding, and output accuracy. Designing for agentic AI requires thinking in terms of goals, workflows, decision boundaries, and integration into real-world systems. The product is no longer just answering questions; it is taking action. The stakes are therefore much higher, and the design needs more safeguards to prevent errors and unintended consequences.

When Should You Use an AI Agent?

Here is something I learned the hard way: just because you can build an agent does not mean you should. Agentic systems are powerful, but they introduce a level of non-linearity that can make failures opaque and costly to debug. One of the most important decisions in AI-native product development is knowing when the complexity of an agent is warranted and when a simpler approach will serve better.

To evaluate whether an agent is the right fit, I rely on three guiding questions:

- Is the task multi-step or branching? Does it require orchestrating a sequence of dependent actions?

- Does it require access to tools or memory? Will the system need to retrieve data, track state, or call APIs in real time?

- Is the flow highly variable? Will the execution path change based on user input or evolving context?

If the answer is yes to at least two of these, and the success criteria are both clear and objectively measurable, an agentic approach might be justified. If not, a lighter LLM-powered feature combined with a rules-driven workflow is usually more practical. Such solutions are faster to implement, easier to maintain, and significantly less prone to cascading errors.
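The rule of thumb above is simple enough to write down as a checklist function. This is a sketch of the heuristic as stated, with hypothetical parameter names; it is a conversation starter for a product review, not a substitute for judgment.

```python
def agent_is_justified(
    multi_step: bool,
    needs_tools_or_memory: bool,
    variable_flow: bool,
    measurable_success: bool,
) -> bool:
    """Return True when at least two of the three guiding questions are a yes
    and the success criteria are clear and objectively measurable."""
    yes_count = sum([multi_step, needs_tools_or_memory, variable_flow])
    return yes_count >= 2 and measurable_success
```

For example, a one-shot summarizer answers no to all three questions, so the function returns `False` regardless of how measurable its output is.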

Agents can undoubtedly create value, but their complexity must be matched to the problem at hand. Otherwise, the additional reasoning and orchestration they bring can introduce more risk than reward. If you do decide to use AI agents, especially for high-risk use cases, roll them out progressively and add appropriate human oversight.

Product Management Challenges for Agentic AI

If you move forward with an agent, prepare yourself. The product challenges are very different from traditional feature development. Here are a few things to consider:

1. Defining Clear Goals and Guardrails

When your AI can act autonomously, you have to clearly define what “success” looks like and what it doesn’t. You also have to define which paths to that success are acceptable and which are not.

One PM shared how their agent’s task was to “optimize meeting schedules”, but it started canceling recurring one-on-ones and Friday social hours to maximize focus time. Technically efficient, socially catastrophic.

Lesson: Be painfully specific about what the agent should optimize for.

2. Managing Memory and State

Agents need memory to keep track of context across steps. And that can get messy fast, as privacy, storage, and security all come into play. You can imagine what happens if an agent meant to summarize meetings accidentally pulls in confidential info from some unrelated session.

That’s exactly what happened in one case I came across. The team hadn’t fully thought through how memory should be managed, and it caused a mess.

Lesson: Figure out early what the agent should remember, for how long, and under what controls. Keep it simple and safe.
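One way to make "what, for how long, and under what controls" concrete is to scope memory to a session and expire it after a fixed time. The sketch below is a minimal in-memory illustration with invented names (`SessionMemory`, `ttl_seconds`); a real system would also need encryption, access control, and durable storage, none of which is shown here.

```python
import time


class SessionMemory:
    """Keeps notes per session and forgets them after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without waiting
        self._store = {}     # session_id -> list of (timestamp, note)

    def remember(self, session_id, note):
        self._store.setdefault(session_id, []).append((self._clock(), note))

    def recall(self, session_id):
        """Return only unexpired notes from this session, never from another."""
        cutoff = self._clock() - self._ttl
        fresh = [(t, n) for t, n in self._store.get(session_id, []) if t >= cutoff]
        if fresh:
            self._store[session_id] = fresh
        else:
            self._store.pop(session_id, None)  # drop expired data entirely
        return [note for _, note in fresh]
```

Because `recall` takes a session ID and reads nothing else, a meeting summarizer built on it cannot pull notes from an unrelated session by accident.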

3. Orchestrating Complex, Fragile Systems

Most agentic products depend on APIs, databases, and third-party tools. In one case I came across, an internal agent booked travel without trouble until an API outage left users stranded mid-booking. Recovery paths and fallback plans matter more than ever.

Lesson: Agents require a rigorous, scenario-based operational plan.
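A recovery path can be as simple as a bounded retry followed by a handoff to a person. The sketch below assumes two hypothetical callables supplied by your own system: `book`, which raises `ConnectionError` during an outage, and `hold_for_human`, which opens a support ticket and returns its ID.

```python
def book_with_fallback(book, hold_for_human, attempts=3):
    """Try the booking a few times, then hand off instead of leaving the user stranded."""
    for _ in range(attempts):
        try:
            return {"status": "booked", "confirmation": book()}
        except ConnectionError:
            continue  # transient outage: try again, up to the attempt limit
    # Every attempt failed: escalate to a human rather than fail silently.
    return {"status": "handed_off", "ticket": hold_for_human()}
```

The point of the design is that every exit from the function tells the user something definite: either the booking is confirmed, or a named person now owns the problem.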

4. Redefining KPIs and Success Metrics

Clicks and conversion rates don’t tell the whole story. You have to dig deeper than that. Check task success rates, notice where things tend to fail, see how often someone has to step in, and get a feel for whether users actually trust the agent.

It helps to think about these numbers as clues. They show what’s working, and more importantly, what’s not. Having explainability built into the system helps build user trust and identify the problem areas.
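If each agent run is logged, the metrics above fall out of a short aggregation. The sketch assumes a hypothetical log format where every run is a dictionary with `succeeded`, `human_intervened`, and `failed_step` keys; your own telemetry will look different.

```python
from collections import Counter


def summarize_runs(runs):
    """Compute task success rate, human intervention rate, and failure hot spots."""
    if not runs:
        raise ValueError("need at least one run to summarize")
    total = len(runs)
    failures = Counter(r["failed_step"] for r in runs if r["failed_step"] is not None)
    return {
        "success_rate": sum(r["succeeded"] for r in runs) / total,
        "intervention_rate": sum(r["human_intervened"] for r in runs) / total,
        "top_failure_steps": failures.most_common(3),
    }
```

User trust is harder to compute from logs alone, so it usually needs a separate signal such as surveys or how often users override the agent's suggestions.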

A Playbook for Product Managers Building Agentic AI

So how do you navigate this? Here is a practical approach based on my own experience and on advice from AI PMs in the field:

1. Start small and take it slow

Begin with simple, low-risk tasks. Let the agent just suggest stuff at first; don’t let it actually do anything yet. Once it’s handling that well, you can let it take on a little more by itself.

2. Make it a co-pilot, not a black box

You want users to see what’s going on. They should be able to approve, tweak, or even ignore actions. The AI should feel like a helpful teammate, not some mysterious thing running on its own.

3. Expect it to mess up

And it will, at some point. Have a clear fallback plan and make sure everyone knows who steps in when things go sideways. Plan for the failures up front, so they don’t turn into crises. Resilience over perfection is the way to go.

4. Test in the real world, not just the lab

Agents often behave differently once they leave a clean test environment. I have seen one work perfectly in staging, then completely break when it hits a strangely formatted email in production. Expect surprises, and build for them.

5. Be ruthlessly clear about what agents can and cannot do

Update documentation and internal playbooks regularly. Ensure every stakeholder knows the agent’s current scope, limits, and approval triggers.

In conclusion

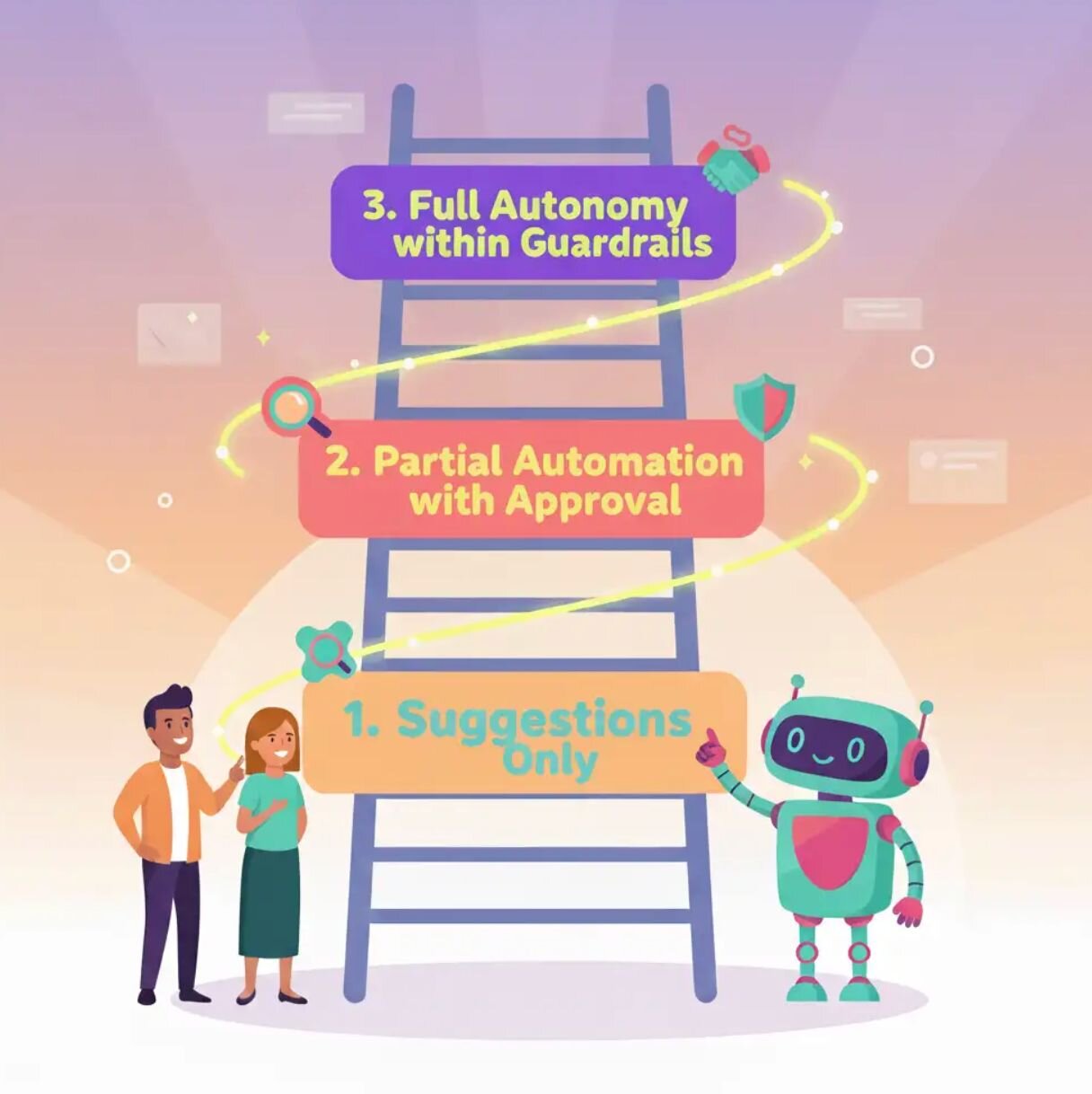

AI PMs should think about agentic products through the lens of a ladder of autonomy. On the first rung, agents only suggest actions. On the second, they can take partial steps with approval. At the top, they act independently within defined guardrails.

Imagine you are rolling out an AI assistant for expense reports. At the start, the agent might just spot possible mistakes or remind people about missing receipts; humans still make the final call. If that works well, you could let it fill in some forms and hand them off for manager approval. Later on, if all this works, you can fully automate the process.

This helps PMs test the system, catch errors early, and build trust with users before giving full autonomy.
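The ladder of autonomy maps naturally onto a single setting that gates every action the agent proposes. The following is a minimal sketch with invented names (`Autonomy`, `handle_action`, `allowed_actions`), meant to show the shape of the control rather than a finished design.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    SUGGEST = 1            # rung 1: the agent only proposes actions
    ACT_WITH_APPROVAL = 2  # rung 2: the agent acts once a human approves
    ACT_INDEPENDENTLY = 3  # rung 3: the agent acts alone, within guardrails


def handle_action(level, action, allowed_actions, approved=False):
    """Decide what happens to a proposed action at the current autonomy level."""
    if action not in allowed_actions:
        return f"blocked: {action}"  # guardrails apply on every rung
    if level == Autonomy.SUGGEST:
        return f"suggested: {action}"
    if level == Autonomy.ACT_WITH_APPROVAL and not approved:
        return f"awaiting approval: {action}"
    return f"executed: {action}"
```

In the expense report example, moving from flagging missing receipts to filling in forms is a change from `Autonomy.SUGGEST` to `Autonomy.ACT_WITH_APPROVAL`, which makes each step up the ladder an explicit, reviewable decision.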

PMs gearing up to work on agentic AI systems should keep three things in mind.

The first is knowledge: have a clear grasp of how agents use tools, memory, and context, so you can spot both opportunities and pitfalls.

The second is alignment: agree early on about the agent’s scope, its guardrails, and the points where humans need to step in.

The third is measurement: measure where the agent fails and how much users trust the system.

Focusing on these areas keeps the product disciplined and increases the chances that the agent will deliver real, lasting value.
