When you search for something on an e-commerce site, do you ever pause at the top results and wonder: How many of these are paid ads, how many are regular (non-ad) listings, and why do some ads feel useful while others just feel like noise?

As a product manager at eBay, working at the intersection of the Ads and Search ecosystems, I’ve spent a lot of time thinking about these invisible choices - because they affect how buyers feel, how advertisers and sellers succeed, and whether revenue grows in a sustainable way.

Today, the funnel that takes a buyer from search query to purchase isn’t just shaped by bids and keywords anymore. AI-powered retrieval, ranking models, and even emerging agent-like systems are influencing what shows up, when it shows up, and how it’s presented. Buyers are encountering more personalized, context-aware ads; sellers are experimenting with AI-generated content and bidding strategies; and platforms are constantly balancing short-term monetization against long-term trust.

That’s what makes this topic so relevant right now. In this article, I’ll walk through the buyer’s journey funnel - from query to click to post-purchase - and unpack the product decisions hidden at each stage. I’ll share where AI is helping, where it creates new risks, and what I’ve learned in practice about balancing user experience with business outcomes.

From query to click: The buyer’s search funnel

The journey starts with retrieval: pulling together a set of potential results from both regular listings (organic) and paid ads. What you allow into that pool matters. Do you let every seller who bids show up? Or do you filter early based on seller quality, reviews, inventory, or predicted satisfaction?
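To make the early-filtering question concrete, here is a minimal sketch of an eligibility check applied before anything is ranked. The field names, the 0-5 rating scale, and the 4.0 threshold are all assumptions for illustration, not a description of any production system.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    listing_id: str
    is_ad: bool
    seller_rating: float  # hypothetical 0-5 scale
    in_stock: bool


# Hypothetical threshold; a real value would come from experimentation.
MIN_AD_SELLER_RATING = 4.0


def eligible(candidate: Candidate) -> bool:
    """Decide whether a candidate may enter the retrieval pool."""
    if not candidate.in_stock:
        return False
    # In this sketch the quality bar applies only to paid ads.
    if candidate.is_ad and candidate.seller_rating < MIN_AD_SELLER_RATING:
        return False
    return True


def build_pool(candidates: list[Candidate]) -> list[Candidate]:
    """Keep only the candidates that pass the eligibility check."""
    return [c for c in candidates if eligible(c)]
```

Whether the bar should apply to ads only, or to organic listings as well, is itself one of the product decisions this stage hides.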

When we experimented with semantic retrieval in apparel ads, I noticed something interesting: buyers searching for “track shoes” started seeing results for “running spikes.” Technically relevant, but not what most people intended. It taught me that semantic breadth can feel impressive in demos but jarring in real use if it doesn’t match buyer intent.

Once you’ve got a pool, it’s time for ranking - deciding the order. Is it “highest bidder wins,” or do you weigh relevance and past performance? In feedback from seller groups, the question top bidders ask most often is why their ad wasn’t ranked first. From their perspective, it feels unfair. But as a PM, I knew that surfacing a slightly lower bidder with better delivery times or higher ratings improved buyer trust. These are tough calls - sellers see opacity, while buyers experience trust.
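One common way to express "not just the highest bidder" is to rank by bid multiplied by a quality score. The sketch below assumes that approach; the signals, weights, and the 10-day delivery cutoff are invented for illustration and would need tuning against real data.

```python
from dataclasses import dataclass


@dataclass
class Ad:
    ad_id: str
    bid: float            # advertiser bid, in currency units
    relevance: float      # hypothetical predicted relevance in [0, 1]
    seller_rating: float  # hypothetical 0-5 scale
    delivery_days: float  # hypothetical expected delivery time


def quality(ad: Ad) -> float:
    """Illustrative quality score in [0, 1]; the weights are made up."""
    rating_part = ad.seller_rating / 5.0
    # Faster delivery scores higher; 10 days or slower scores 0.
    delivery_part = max(0.0, 1.0 - ad.delivery_days / 10.0)
    return 0.5 * ad.relevance + 0.3 * rating_part + 0.2 * delivery_part


def rank(ads: list[Ad]) -> list[Ad]:
    """Order ads by bid weighted by quality, highest first."""
    return sorted(ads, key=lambda ad: ad.bid * quality(ad), reverse=True)


ads = [
    Ad("top_bid", bid=1.00, relevance=0.6, seller_rating=3.5, delivery_days=8),
    Ad("lower_bid", bid=0.80, relevance=0.8, seller_rating=4.8, delivery_days=2),
]
print([ad.ad_id for ad in rank(ads)])  # ['lower_bid', 'top_bid']
```

With these numbers the lower bidder wins on the strength of ratings and delivery, which is exactly the outcome a top bidder finds hard to accept without an explanation.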

Then comes user experience: how ads appear on the page. This is where the details matter most.

If ads aren’t clearly labeled, buyers feel tricked - and once trust is lost, it’s hard to rebuild. A 2023 Baymard study found that 54% of shoppers lose confidence if ads aren’t distinguished from regular listings, which shows how even a small design choice can shape perception.

It’s also about balance. In one test, we experimented with extra ads above organic results. The engagement numbers didn’t collapse, but feedback was harsh - buyers said search felt “like scrolling through a billboard.” It was a reminder that trust isn’t measured only in clicks; it’s measured in whether the experience feels respectful of a buyer’s time.

And finally, there’s the tension between personalization and predictability. AI makes it possible to tailor ads to each buyer’s behavior, but not everyone wants surprises. Some buyers prefer consistent results, especially for familiar products. Getting that balance right - between discovery and stability - is one of the hardest challenges for a PM in this space.

Metrics and quality checks are the hidden levers. What you choose to optimize for shapes the entire buyer experience.

Early in my career, I worked on systems tuned purely for clicks. Revenue spiked, but complaints did too - buyers felt misled. Over time, we shifted focus to conversions. Growth slowed temporarily, but retention improved. It reinforced for me that optimizing for long-term value creates more durable outcomes.

Then there’s the question of bad ads - ones that look attractive but deliver poor experiences (wrong item, slow shipping, misleading copy). I remember reviewing reports from a small seller whose ads were filtered out after a string of late deliveries. They weren’t a bad actor - they had simply switched couriers and got unlucky. This scenario made me realize how strict filters protect buyers but can unintentionally punish honest sellers trying to recover.

What I’ve learned about AI in search ads

AI has been one of the biggest shifts in how search ads work, and working in this space has taught me lessons about both the opportunities and the risks.

One of the most powerful changes has been better matching. When we introduced semantic retrieval, buyers started seeing ads that felt more relevant even when the keywords didn’t line up - for example, a search for “couch” showing ads for “sofa.” That improved discovery, but I also learned how easy it is to overshoot. Widen the net too far, and suddenly buyers see results that feel random, which erodes trust quickly. My takeaway: filtering early, even with simple heuristics like minimum seller ratings, is one of the most effective ways to protect the experience.

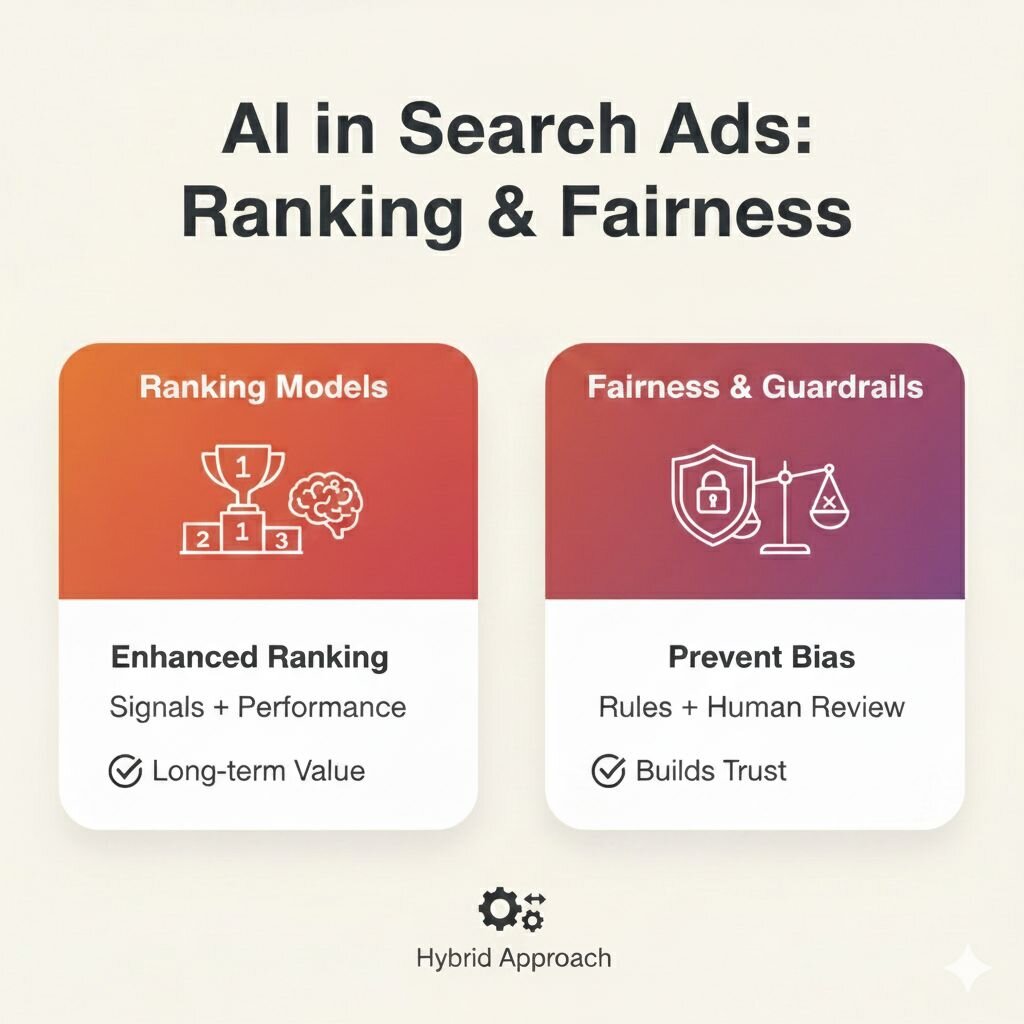

Another area is ranking. Models that combine dozens of signals - seller reliability, historical conversion rates, predicted intent - outperform pure bid-based approaches. But I’ve also seen how these models can “lock in” advantages for already-successful sellers. Without guardrails, the system risks becoming less fair, leaving new sellers at a disadvantage. I’ve found hybrid approaches - rules plus models - strike a better balance between fairness and performance.
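As a rough illustration of "rules plus models", the sketch below wraps a model's ordering in two simple rules: a rating floor, and a guardrail that gives one slot to a new seller when the model's top picks contain none. The `model_score` field stands in for the output of a hypothetical ranking model, and the thresholds and slot count are assumptions.

```python
from dataclasses import dataclass


@dataclass
class ScoredAd:
    ad_id: str
    model_score: float   # output of a hypothetical ranking model
    seller_rating: float
    is_new_seller: bool


MIN_SELLER_RATING = 4.0  # hypothetical quality floor
NUM_SLOTS = 3            # hypothetical number of ad slots


def select_ads(ads: list[ScoredAd], slots: int = NUM_SLOTS) -> list[ScoredAd]:
    # Rule 1: a hard quality floor, applied before the model's ordering.
    allowed = [ad for ad in ads if ad.seller_rating >= MIN_SELLER_RATING]
    ranked = sorted(allowed, key=lambda ad: ad.model_score, reverse=True)
    chosen = ranked[:slots]
    # Rule 2: if no new seller made the cut, hand the last slot to the
    # best-scoring new seller that passed the floor, if there is one.
    if chosen and not any(ad.is_new_seller for ad in chosen):
        newcomers = [ad for ad in ranked[slots:] if ad.is_new_seller]
        if newcomers:
            chosen[-1] = newcomers[0]
    return chosen
```

Both rules are easy to explain to a seller, which is part of their appeal: the model decides most of the order, while the rules bound what it is allowed to do.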

I’ve also worked with adaptive ad loads, where the number of ads changes dynamically. In one experiment, showing fewer ads to first-time buyers created a cleaner experience and boosted retention. What stuck with me wasn’t just the numbers - it was buyer survey comments saying, “It felt easier to find what I wanted without being sold immediately.” Those words reinforced that ads aren’t just data points on a dashboard - they’re part of how people judge whether a platform respects them.
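In its simplest form, an adaptive ad load is a function from what you know about the buyer to a number of ad slots. The sketch below assumes a single signal (prior purchases) and made-up slot counts; a real system would likely use more signals and learn the values from experiments.

```python
def ad_slots(prior_purchases: int, base_slots: int = 4, first_time_slots: int = 2) -> int:
    """Return how many ad slots to show on a search results page.

    All defaults are hypothetical values chosen for illustration.
    """
    if prior_purchases < 0:
        raise ValueError("prior_purchases must be non-negative")
    if prior_purchases == 0:
        # First-time buyers get a lighter ad load, never more than the base.
        return min(first_time_slots, base_slots)
    return base_slots


print(ad_slots(0))  # 2
print(ad_slots(5))  # 4
```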

Finally, there’s explainability and transparency. Buyers and sellers are far more accepting of AI-driven results when you explain them. Even something as simple as: “Shown because you searched for X and this seller offers fast shipping” can build trust. Similarly, clear ad labeling - like “Sponsored” - is non-negotiable. One survey found that 76% of consumers felt more positive toward platforms that labeled ads clearly.
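An explanation like the one quoted above can be assembled from signals the ranking system already has. This is a minimal sketch under that assumption; the function name, the `seller_signals` parameter, and the exact wording of the label are hypothetical.

```python
def explain_ad(query: str, seller_signals: list[str]) -> str:
    """Build a short, labeled explanation for why an ad was shown."""
    text = f'Shown because you searched for "{query}"'
    if seller_signals:
        # Each signal is a short phrase such as "offers fast shipping".
        text += " and this seller " + " and ".join(seller_signals)
    return f"Sponsored: {text}"


print(explain_ad("couch", ["offers fast shipping"]))
# Sponsored: Shown because you searched for "couch" and this seller offers fast shipping
```

Keeping the "Sponsored" label inside the same string makes it harder for a later design change to drop the label while keeping the explanation.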

Stepping back, these aren’t just technical tweaks - they’re product choices. Optimizing only for clicks drives flashy ads but disappoints buyers. Shifting to conversion and retention slows revenue in the short term but builds loyalty in the long run. And if you don’t address feedback loops, high-performing ads will dominate simply because of visibility, not relevance. As PMs, we need to design with these dynamics in mind - because they determine whether the ad ecosystem thrives or collapses under mistrust.

Final thoughts

The buyer’s path from query to click might look simple, but every stage - retrieval, ranking, relevance, UX - hides tough product calls. Balancing revenue with user experience isn’t just a financial decision; it’s a trust decision. From what I’ve seen, the PMs who do this well tend to follow a few principles:

- Set clear optimization goals. Don’t just optimize for the metric that’s easiest to measure (like clicks). Start by asking: what outcome matters most for buyers and sellers in the long run? For some products, that’s retention. For others, it might be seller diversity or marketplace health. Make the goal explicit, and revisit it often.

- Use AI carefully, with guardrails. AI-driven retrieval and ranking can make results feel smarter - but it can also amplify bias or lock in advantages for incumbents. The best PMs pair models with simple, interpretable rules (like minimum seller rating thresholds) to ensure fairness and transparency. A hybrid system often performs better and earns more trust.

- Design for monetization and delight. Too often these are treated as trade-offs, but they don’t have to be. In the adaptive ad load experiment, for example, showing fewer ads to first-time buyers created a cleaner experience and improved retention. If you frame monetization experiments through the lens of buyer respect, you’ll often find opportunities where business outcomes and user delight align.

- And maybe the most underrated one: listen to the qualitative signals. Metrics tell you what’s happening; user comments tell you why it matters. In my experience, phrases like “it felt like scrolling through a billboard” or “it was easier to find what I wanted” are some of the clearest indicators of whether your monetization choices are building trust or eroding it.

So I’ll leave you with this: in your own product, are you optimizing your ad systems for clicks or for long-term trust?