What I learned from judging 450+ innovation submissions and how you can use the same lens to make better product calls

As product managers, we are constantly playing judge. Every roadmap review, every feature prioritization, every conversation with a founder pitching a partnership requires us to make calls about what deserves time, budget, and organizational attention. Should we greenlight this experiment? Is that startup partnership worth pursuing? Which hackathon demo deserves to move from "cool prototype" to "real investment"?

Most of us make these decisions by instinct. We rely on gut feel, the polish of a deck, or the energy of a team. But what happens when instinct isn't enough?

Over the past several years, I've evaluated submissions for international awards like the Stevie Awards and Globee Awards, reviewed technical papers for IEEE conferences, and advised accelerators and hackathons. In total, I've judged more than 450 innovation submissions spanning fields from AI to e-commerce to customer experience. What surprised me most was how quickly patterns began to emerge. The ideas that consistently stood out shared deeper qualities that separated the winners from the also-rans, and I realized I should be applying those same qualities more systematically in my day-to-day product work.

What follows is the framework I developed from those experiences, designed to help you and your teams make better product decisions every day.

Why Learning to Judge Innovation Matters

Every product manager is in the business of judgment. Each week, we make calls that ripple through our companies: Which customer problems deserve engineering time? Which features are truly needle-movers? Which bold bets are worth the risk of failure?

Getting better at judging innovation develops three critical superpowers:

Discernment: the ability to separate genuine breakthroughs from buzzword-heavy pitches and flashy demos. This skill becomes particularly vital in today's climate, where generative AI has made it easy to dress up incremental ideas as radical reinventions.

Pattern recognition: building a mental library of what has worked (and not worked) in the past, so you can predict what is likely to succeed.

Influence: the credibility to articulate why an idea is worth pursuing, backed not just by intuition but by a structured framework that stakeholders can rally around.

After months of judging IEEE submissions, I noticed my instincts at Amazon sharpening. Judging outside ideas, over and over again, recalibrated my internal compass for what was truly innovative.

The Four Pillars of Great Innovation

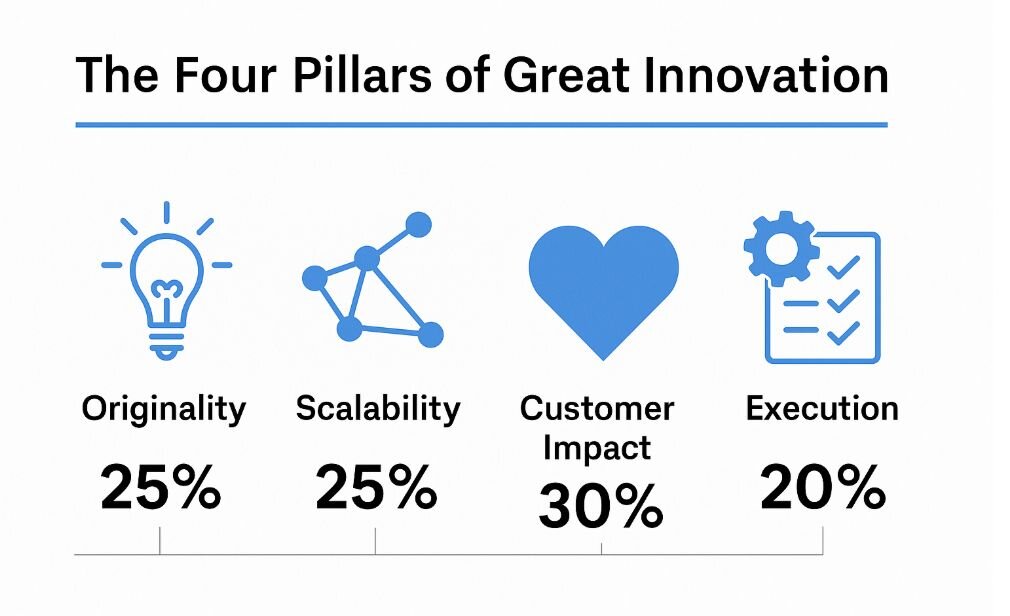

After hundreds of evaluations, four dimensions consistently separated the winners from the wannabes: originality, scalability, customer impact, and execution. I weigh them at 25%, 25%, 30%, and 20% respectively, though the exact mix depends on context.

Source: Generated with ChatGPT
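To make the weighting concrete, here is a minimal Python sketch that turns per-pillar ratings into a single score. It assumes each pillar is rated on a 1-to-10 scale; the scale, the `PILLAR_WEIGHTS` dictionary, and the `weighted_score` helper are hypothetical illustrations, not a tool used by any of the judging programs mentioned here. Only the 25/25/30/20 split comes from the framework itself.

```python
# Default pillar weights from the framework; they must sum to 1.0.
PILLAR_WEIGHTS = {
    "originality": 0.25,
    "scalability": 0.25,
    "customer_impact": 0.30,
    "execution": 0.20,
}


def weighted_score(scores: dict[str, float],
                   weights: dict[str, float] = PILLAR_WEIGHTS) -> float:
    """Combine per-pillar ratings (assumed 1-10 scale) into one weighted score."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing pillar scores: {sorted(missing)}")
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    return sum(weights[p] * scores[p] for p in weights)


# Hypothetical submission: novel and impactful, but unproven at scale.
submission = {"originality": 8, "scalability": 5, "customer_impact": 9, "execution": 6}
print(round(weighted_score(submission), 2))
```

Because customer impact carries the largest weight, a one-point change in that rating moves the total more than a one-point change in any other pillar.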

1. Originality (25%)

The first question: Is this actually new? Not new in the sense of being built with the latest framework, but new in the sense of reframing a problem or combining familiar elements in surprising ways.

True originality pushes boundaries into uncharted territory, reframes old problems from fresh angles, and integrates known components in ways that produce emergent value.

I've learned to be wary of submissions overloaded with jargon, such as pitches for "AI-powered blockchain for metaverse commerce" that collapse into conventional apps on closer inspection. If you cannot explain what is new in plain English, it probably isn't. By contrast, one IEEE paper I reviewed used well-known motion analysis techniques for a completely new application: non-invasive seizure detection. The algorithms themselves were not novel, but applying them to this problem space created enormous potential clinical value.

The Generative AI listings tool at Amazon exemplifies this by making sophisticated listing automation available to hundreds of thousands of sellers across languages and geographies, lowering barriers for small businesses while improving catalogue quality at scale.

2. Scalability (25%)

Many ideas look brilliant in a controlled demo but fall apart in the real world. The question: if this works, what would prevent it from becoming huge?

Scalability has several dimensions: market dynamics (can this expand across geographies and customer segments?), technical architecture (can the system support 10x or 100x growth?), and organizational replication (can other teams adopt it?).

At Amazon, the Sub Same-Day Delivery program, which enables delivery in as little as 7 hours, demonstrated this principle. By designing co-location strategies and AI-driven inventory optimization into the core architecture, we built a model that scaled across multiple regions. Scalability was not a bolt-on; it was baked in from the start.

3. Customer Impact (30%)

The third pillar, and the one I weigh most heavily, is customer impact. Technology without impact is just a parlor trick.

Customer impact has both quantitative dimensions (conversion lifts, adoption rates, engagement improvements, cost or time savings) and qualitative dimensions (solving painful and widespread problems, creating genuine delight, or expanding access for underserved groups).

The most successful products in history have not always been the most advanced technically, but they made people's lives better. The iPhone triumphed because it made smartphones usable for ordinary people. Facebook won because it made social connection effortless.

The Rufus GenAI Shopping Assistant exemplifies this. Not only has it been adopted by millions, but it has also created entirely new ways for customers to discover products that were not possible with search alone. It changed shopping from transactional to conversational.

4. Execution (20%)

Ideas are cheap; delivering them at scale is what matters.

Execution is about whether a team has the discipline, experience, and foresight to handle the unglamorous realities: security, compliance, edge cases, localization, operational playbooks. It is also about adaptability: how teams respond when users do something unexpected.

I pay close attention to track record. Has the team shipped similar products before? Do they proactively surface the difficult parts? Do they provide evidence of adoption and customer value?

Putting the Framework to Work

Frameworks only matter if they are usable. The key is adaptation. At Amazon, we differentiate between "one-way door" decisions (irreversible and high stakes) and "two-way door" decisions (reversible experiments). For one-way doors, we weigh scalability and execution more heavily. For two-way doors, originality and customer impact matter more.
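One way to encode the one-way/two-way adjustment is to boost the emphasized pillars and renormalize so the weights still sum to 1.0. This is a minimal sketch under stated assumptions: the 1.5x `boost` factor and the `weights_for` helper are hypothetical, since the framework says which pillars to emphasize but not by how much.

```python
# Default pillar weights (sum to 1.0).
BASE_WEIGHTS = {
    "originality": 0.25,
    "scalability": 0.25,
    "customer_impact": 0.30,
    "execution": 0.20,
}

# Pillars to emphasize for each decision type.
EMPHASIS = {
    "one_way": ("scalability", "execution"),        # irreversible, high stakes
    "two_way": ("originality", "customer_impact"),  # reversible experiments
}


def weights_for(door: str, boost: float = 1.5) -> dict[str, float]:
    """Scale emphasized pillars by a hypothetical `boost`, then renormalize to 1.0."""
    if door not in EMPHASIS:
        raise ValueError(f"unknown door type: {door!r}")
    raw = {p: w * (boost if p in EMPHASIS[door] else 1.0)
           for p, w in BASE_WEIGHTS.items()}
    total = sum(raw.values())
    return {p: w / total for p, w in raw.items()}


for door in EMPHASIS:
    print(door, {p: round(w, 3) for p, w in weights_for(door).items()})
```

Renormalizing means the pillars you do not emphasize shrink proportionally rather than staying fixed, which keeps scores comparable across decision types.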

Applied day to day, this framework creates transparency. Instead of endless debates about which feature "feels" more important, teams can score options across the four pillars. It won't make the decision for you, but it will make the reasoning clear and the trade-offs explicit.
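Scoring options side by side might look like the following minimal sketch. The two candidate features and their 1-to-10 ratings are hypothetical; the useful part is the final loop, which breaks the gap between the top two options into weighted per-pillar contributions so the trade-off is visible rather than buried in a single number.

```python
WEIGHTS = {"originality": 0.25, "scalability": 0.25, "customer_impact": 0.30, "execution": 0.20}

# Hypothetical candidate features, each rated 1-10 on every pillar.
options = {
    "Feature A": {"originality": 9, "scalability": 4, "customer_impact": 7, "execution": 5},
    "Feature B": {"originality": 5, "scalability": 8, "customer_impact": 7, "execution": 8},
}


def total(scores: dict[str, float]) -> float:
    """Weighted sum of one option's pillar ratings."""
    return sum(WEIGHTS[p] * scores[p] for p in WEIGHTS)


# Rank options from highest to lowest weighted score.
ranked = sorted(options, key=lambda name: total(options[name]), reverse=True)
for name in ranked:
    print(f"{name}: {total(options[name]):.2f}")

# Make the trade-off explicit: weighted per-pillar gap between the top two options.
top, runner_up = ranked[0], ranked[1]
for p in WEIGHTS:
    gap = WEIGHTS[p] * (options[top][p] - options[runner_up][p])
    print(f"  {p:<16} {gap:+.2f}")
```

Here the higher-ranked option gives up a full point on originality to gain on scalability and execution, which is exactly the kind of trade-off a team should debate openly.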

Common Traps to Avoid

After reviewing hundreds of innovations, I've noticed the same mistakes appear repeatedly:

The "Shiny Object" trap: Falling for flashy new technology without considering fundamentals. Google's graveyard of discontinued products (Google Glass, Google+, Google Wave) shows that even the most innovative companies fall into this trap when they prioritize technology coolness over customer value.

The "Perfect Pitch" trap: Being swayed by polished presentations that hide weak execution capabilities. Always dig into the details by asking for technical architecture diagrams, customer references, and usage analytics.

The "Incremental Dismissal" trap: Overlooking solid improvements because they're not revolutionary. Amazon's success largely comes from systematic incremental innovation. Sometimes a 10% improvement across millions of users beats a 100% improvement for a tiny niche.
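The arithmetic behind "a 10% improvement across millions beats 100% for a niche" is reach times per-user gain. The user counts and the `baseline_per_user` value below are hypothetical assumptions chosen only to illustrate the comparison; the last case shows why the claim says "sometimes": a niche whose users each get far more value can still win.

```python
def aggregate_gain(users: int, relative_improvement: float,
                   baseline_per_user: float = 1.0) -> float:
    """Total gain = users x baseline value per user x relative improvement."""
    return users * baseline_per_user * relative_improvement


# Hypothetical numbers for illustration only.
broad = aggregate_gain(users=2_000_000, relative_improvement=0.10)
niche = aggregate_gain(users=50_000, relative_improvement=1.00)
# Same niche, but each user is assumed to get 5x the baseline value.
high_value_niche = aggregate_gain(users=50_000, relative_improvement=1.00,
                                  baseline_per_user=5.0)

print(f"broad: {broad:,.0f}  niche: {niche:,.0f}  high-value niche: {high_value_niche:,.0f}")
```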

The "Local Optimum" trap: Focusing only on your immediate market without considering broader applicability. Global thinking leads to bigger wins, but it also requires different execution capabilities.

Why This Makes You a Better PM

Becoming a better judge of innovation made me a better product manager in unexpected ways:

Broader perspective: Seeing dozens of approaches to similar problems expanded my solution toolkit substantially. When facing challenges at work, I now have a richer set of patterns to draw from.

Higher standards: Understanding what truly excellent innovation looks like raised my bar for everything we build. Once you've seen innovation that genuinely transforms user experiences, it becomes harder to settle for "good enough."

Better communication: Having a structured way to evaluate ideas made me much more effective at explaining product decisions to executives, engineers, and stakeholders. Instead of relying on intuition, I could articulate specific reasons why one approach was stronger than alternatives.

Community connection: Participating in the broader innovation community through judging and peer review has created valuable professional relationships and learning opportunities that provide early signals on emerging trends.
