As product managers, we're all familiar with stakeholder management: identifying who cares, who influences, and who needs what. But when you're building AI products, the game changes. AI sparks intense emotional responses that can make or break your product's success. Traditional stakeholder maps fall short here.

I learned this firsthand when working on a remote monitoring system with an AI chatbot feature designed to support patients between doctor visits. That chatbot was supposed to be supportive and empowering, but as a Product Manager, I found myself drowning in conflicting emotional responses:

- Users felt skeptical about trusting AI with their health concerns

- The CTO was deeply concerned about data security and liability

- The Founder was extremely excited about AI capabilities and competitive advantage

- The development team felt uncertain about technical implementation

This wasn't just feedback. It was an emotional storm that paralyzed our decision-making process. Traditional stakeholder analysis focuses on influence and interest, but it doesn't account for the emotional intensity that AI products generate. I needed a way to visualize and navigate this emotional complexity before we could move forward effectively.

That's when I developed what I call the "Emotional Radar" – a practical tool for mapping and managing the emotional landscape around your AI product.

The Emotional Radar plots stakeholders across two crucial dimensions:

- Trust Level: How much confidence they currently have in your product/team

- Emotional Investment: How much emotional energy they need to engage effectively with your product

This creates four distinct zones, each requiring different communication and design strategies.

How to build your Emotional Radar step-by-step

Step 1: Identify your stakeholders

Start by listing everyone who influences or is influenced by your AI product – both internal and external stakeholders.

Step 2: Assess trust and emotional investment

For each stakeholder, score them on a scale of 1-10 for:

- Current trust level: How much do they trust your product right now?

- Emotional investment required: How much emotional energy do they need to engage with your product?

For example, for our AI chatbot, users were a 3 on trust level (skeptical) and a 7 on emotional investment (high anxiety about health data). The founder was an 8 on trust (excitement) and a 9 on emotional investment (deeply invested in AI's strategic value).

Step 3: Document specific emotions

Go beyond a general "good" or "bad". Pinpoint the specific emotions driving their behavior. Is it excitement about innovation, fear of privacy breaches, skepticism about accuracy, or uncertainty about job security?

For example, instead of "users are negative," we noted "users feel skepticism about data privacy."
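If you prefer to keep these notes in a script rather than a spreadsheet, Steps 2 and 3 can be captured in one small record per stakeholder. This is only a minimal sketch: the `StakeholderEntry` class and its field names are my own illustration, not part of any tool, and the two entries use only the scores and emotions quoted in this article.

```python
from dataclasses import dataclass, field


@dataclass
class StakeholderEntry:
    """One radar entry: two 1-10 scores plus the specific emotions observed."""
    name: str
    trust: int          # current trust level, 1-10
    investment: int     # emotional investment required, 1-10
    emotions: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Reject scores outside the 1-10 scale used in Step 2.
        for label, score in (("trust", self.trust), ("investment", self.investment)):
            if not 1 <= score <= 10:
                raise ValueError(f"{label} must be between 1 and 10, got {score}")


# Values taken from the chatbot example in this article.
entries = [
    StakeholderEntry("Users", trust=3, investment=7,
                     emotions=["skepticism about data privacy"]),
    StakeholderEntry("Founder", trust=8, investment=9,
                     emotions=["excitement about AI capabilities and competitive advantage"]),
]

for entry in entries:
    print(f"{entry.name}: trust {entry.trust}, investment {entry.investment}, "
          f"emotions: {', '.join(entry.emotions)}")
```

Keeping the emotions as free text next to the scores makes it harder to slide back into a vague "good" or "bad" label.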

Step 4: Plot and analyze

Place each stakeholder on your radar based on their trust/investment scores. You'll quickly see patterns emerge and identify which "emotional zones" are most populated.
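The plotting step can also be approximated in a few lines of code. The sketch below rests on several assumptions of mine: scores above 5 count as "high", the function name `zone_for` is hypothetical, and only the two zones this article names (Trust Building and Advocacy) get proper labels. Advocacy is inferred from the founder's scores, and the other two quadrants are described rather than named.

```python
from collections import Counter

HIGH_THRESHOLD = 5  # assumption: a score above 5 on the 1-10 scale counts as "high"


def zone_for(trust: int, investment: int) -> str:
    """Map a (trust, emotional investment) pair to one of four quadrants."""
    high_trust = trust > HIGH_THRESHOLD
    high_investment = investment > HIGH_THRESHOLD
    if high_investment:
        # The two zones named in this article.
        return "Advocacy" if high_trust else "Trust Building"
    # The remaining quadrants are described here, not named.
    return "high trust / low investment" if high_trust else "low trust / low investment"


# Scores from the chatbot example: (trust, emotional investment).
scores = {"Users": (3, 7), "Founder": (8, 9)}

zones = {name: zone_for(trust, investment) for name, (trust, investment) in scores.items()}
population = Counter(zones.values())  # how many stakeholders sit in each zone

for name, zone in zones.items():
    print(f"{name}: {zone}")
print(population.most_common())
```

Where you draw the high/low line is a judgment call; what matters is applying the same cutoff to every stakeholder so the zones stay comparable.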

How the Emotional Radar transformed our product strategy

When we mapped the emotional landscape for our AI-driven mental health support app, we discovered that our biggest challenge was emotional rather than technical. Here's how the insights drove our product decisions:

1) Prioritizing development efforts

When your engineering team asks "What should we build next?", the Emotional Radar provides clear guidance. Prioritize features that build confidence.

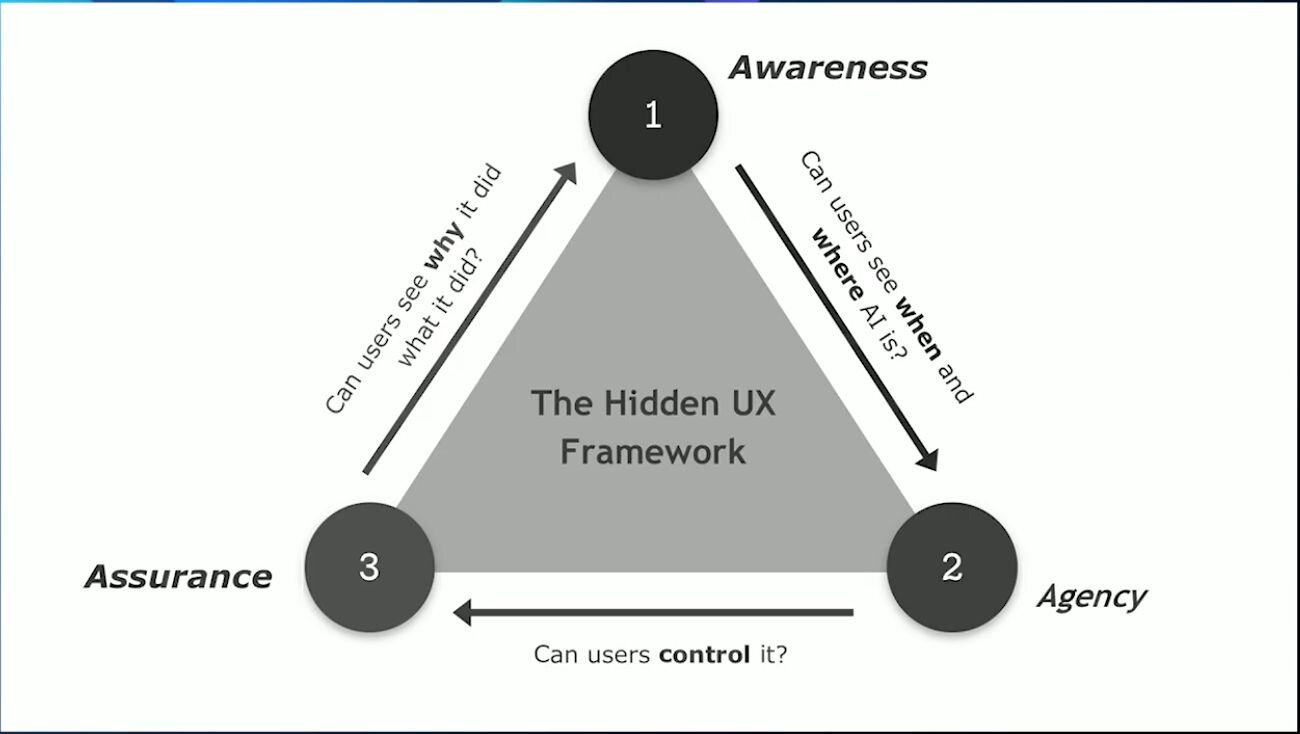

For example, for our AI chatbot, we saw many users in the Trust Building Zone, where stakeholders have low trust and high emotional investment. Instead of adding more complex AI features, we focused on transparency features: clear disclaimers about AI limitations, easily accessible escalation to human support, and a "why this answer was given" explanation for AI responses. This directly addressed their anxiety.

2) Navigating stakeholder conflicts

If different stakeholders want opposing features, map their positions on the Emotional Radar to understand the underlying emotional drivers.

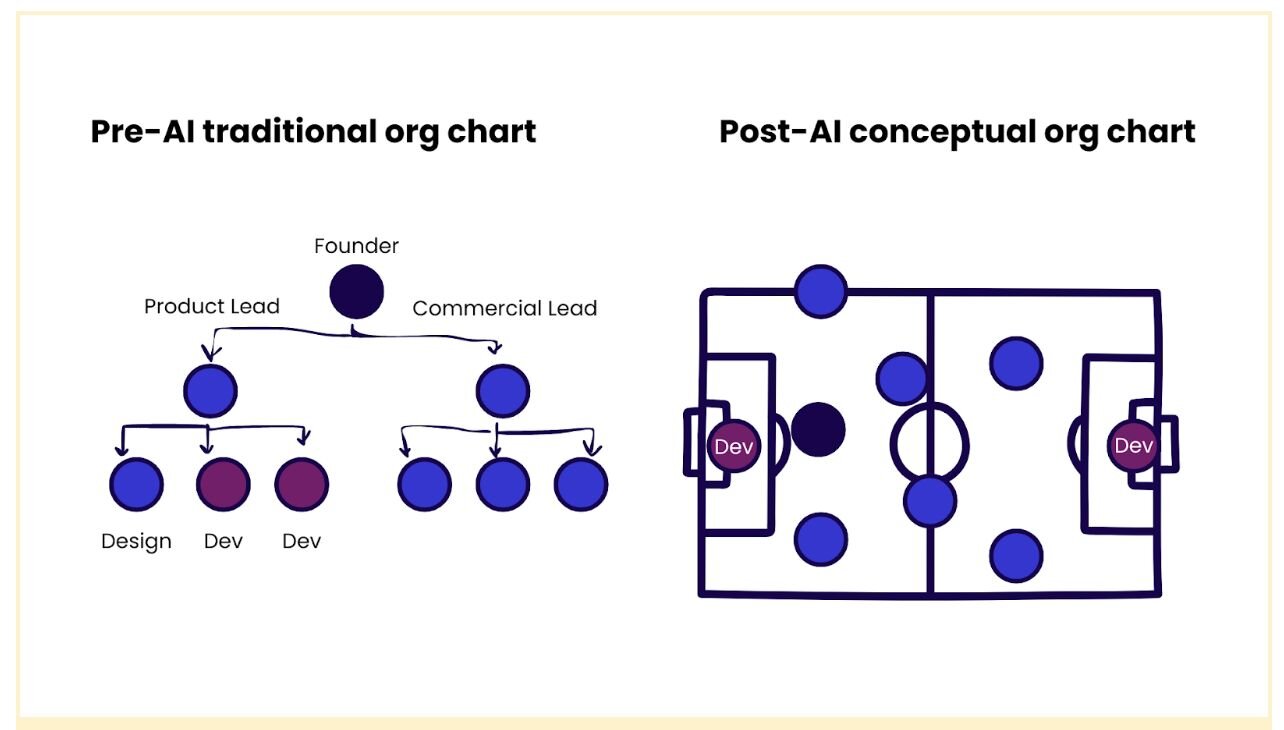

We had a conflict between the founder (Advocacy Zone, wanted rapid feature release) and the CTO (Trust Building Zone, prioritized security). Their emotional needs clashed: the founder's excitement for market leadership versus the CTO's fear of liability. This led us to a dual-track development approach: a core, more conservative AI feature was released quickly for initial user testing (addressing the CTO's security concerns), while a more advanced version continued in development for strategic partnerships (addressing the founder's ambitions). This allowed us to satisfy both emotional drivers without paralyzing progress.

Common pitfalls and how to avoid them

Pitfall 1 – Static mapping

Your Emotional Radar isn't a one-time exercise. Update it monthly and track stakeholder migration. Emotions are fluid, and your product strategy should be too.
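One lightweight way to track migration is to compare this month's scores against last month's. The sketch below is illustrative only: `score_changes` is a hypothetical helper, and the second snapshot is made-up data for demonstration, not a result from our project.

```python
Snapshot = dict[str, tuple[int, int]]  # name -> (trust, emotional investment)


def score_changes(previous: Snapshot, current: Snapshot) -> dict[str, tuple[int, int]]:
    """Return (trust delta, investment delta) for stakeholders whose scores moved."""
    changes = {}
    for name, (trust, investment) in current.items():
        if name not in previous:
            continue  # new stakeholders have no baseline to compare against
        old_trust, old_investment = previous[name]
        delta = (trust - old_trust, investment - old_investment)
        if delta != (0, 0):
            changes[name] = delta
    return changes


last_month: Snapshot = {"Users": (3, 7), "Founder": (8, 9)}  # scores from the example above
this_month: Snapshot = {"Users": (5, 6), "Founder": (8, 9)}  # hypothetical follow-up scores

for name, (trust_delta, investment_delta) in score_changes(last_month, this_month).items():
    print(f"{name}: trust {trust_delta:+d}, investment {investment_delta:+d}")
```

A positive trust delta after shipping a transparency feature is a useful, if rough, signal that the feature is doing its job.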

Pitfall 2 – Zone stereotyping

Don't assume everyone in a zone is identical. Use zones as starting points, then dive deeper into individual emotional drivers.

Pitfall 3 – Team resistance

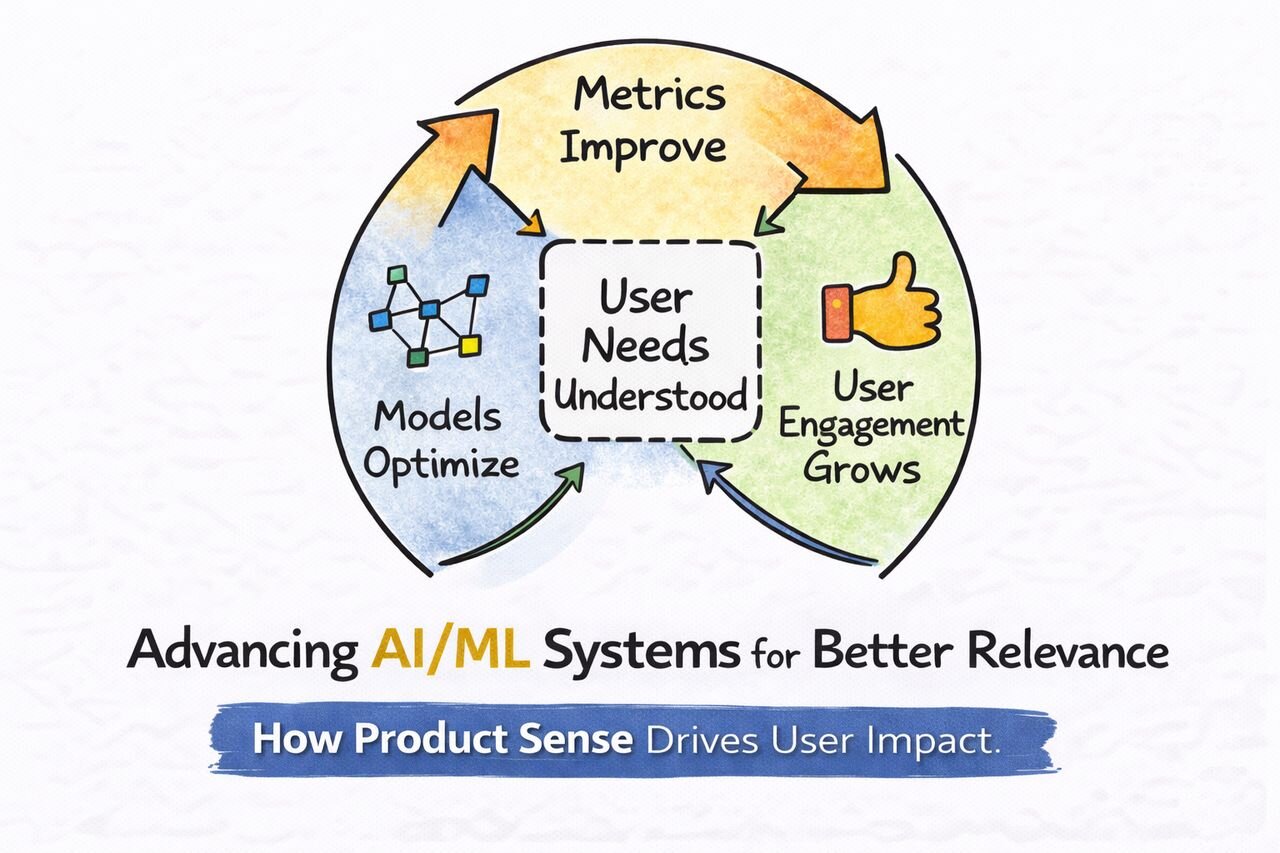

Show your team how emotional intelligence directly impacts technical metrics like adoption, retention, and support costs. When users trust your AI, they use it more, leading to better data for model training and fewer support tickets.

As AI becomes an integral part of our products, the ability to navigate emotional landscapes is no longer a soft skill. It's a core product management competency. The stakeholders who feel understood and supported by your AI product become your strongest advocates. Those who feel ignored or misunderstood become your biggest obstacles.

The Emotional Radar isn't just about building better AI features; it's about building sustainable AI products that people trust, adopt, and advocate for over time.

In the age of AI, the most innovative feature you can build might just be empathy.