AI is a force multiplier that promises to make hiring faster, cheaper, and painless. But how far can we go in automating human judgment?

What We Know About AI and Bias in Hiring

Recently, Harvard researchers spent more than 18 months studying the hiring practices of over 2,400 companies, tracking 37,000 hiring decisions, processing 1.2 million candidate profiles, and testing 23 AI-powered recruitment platforms. What they found is what many of us suspected from the beginning: AI hiring systems discriminate against qualified candidates for one reason or another.

Some typical types of bias embedded in AI hiring tools are gender bias (prioritizing male candidates over female candidates based on male-coded language or experiences); age bias (discriminating against older candidates); racial bias (discriminating against candidates from certain racial backgrounds, for instance, Black or Hispanic candidates); education bias (prioritizing candidates with Ivy League degrees); and disability bias (for example, filtering out candidates who disclose employment gaps due to medical conditions). In a standout case of AI-based hiring bias, reported in 2018, Amazon had to discard its internal AI recruiting tool after discovering it penalized resumes that included the word “women’s” (for example, “women’s chess club captain”).

Interestingly, in most cases, AI hiring platforms are aware of the problem. Finding out that a tool is biased is much easier than weeding out the bias. It’s not that AI is itself choosing to be biased; it’s just being obedient.

An AI-powered hiring tool, like all AI tools, mirrors or even amplifies the patterns in the data it’s fed. So, when a system is trained on data showing that most people currently in a role come from certain educational or racial backgrounds, the system “learns” to favor those patterns. And there is not always a quick fix.

Just to be clear, I am not against using AI in hiring at all. What I mean is that the risks exist along a spectrum, and they vary widely depending upon where and how you choose to apply AI in the hiring process.

Can AI create immense value for product managers in this vertical? That’s a no-brainer. But to what extent can we automate human judgment without exposing ourselves to the less pleasant side of these tools, such as built-in bias?

Let’s look at how we can strategically use AI that supports, rather than replaces, the human complexity of hiring, and how we can minimize bias.

How Companies Are Using AI in Hiring Today

AI has already made deep inroads into how hiring software is designed. Based on my experience in the industry, I group these AI applications into three broad levels, each carrying a different degree of risk:

Level 1: Low Risk — Light AI (Content-Based Tools)

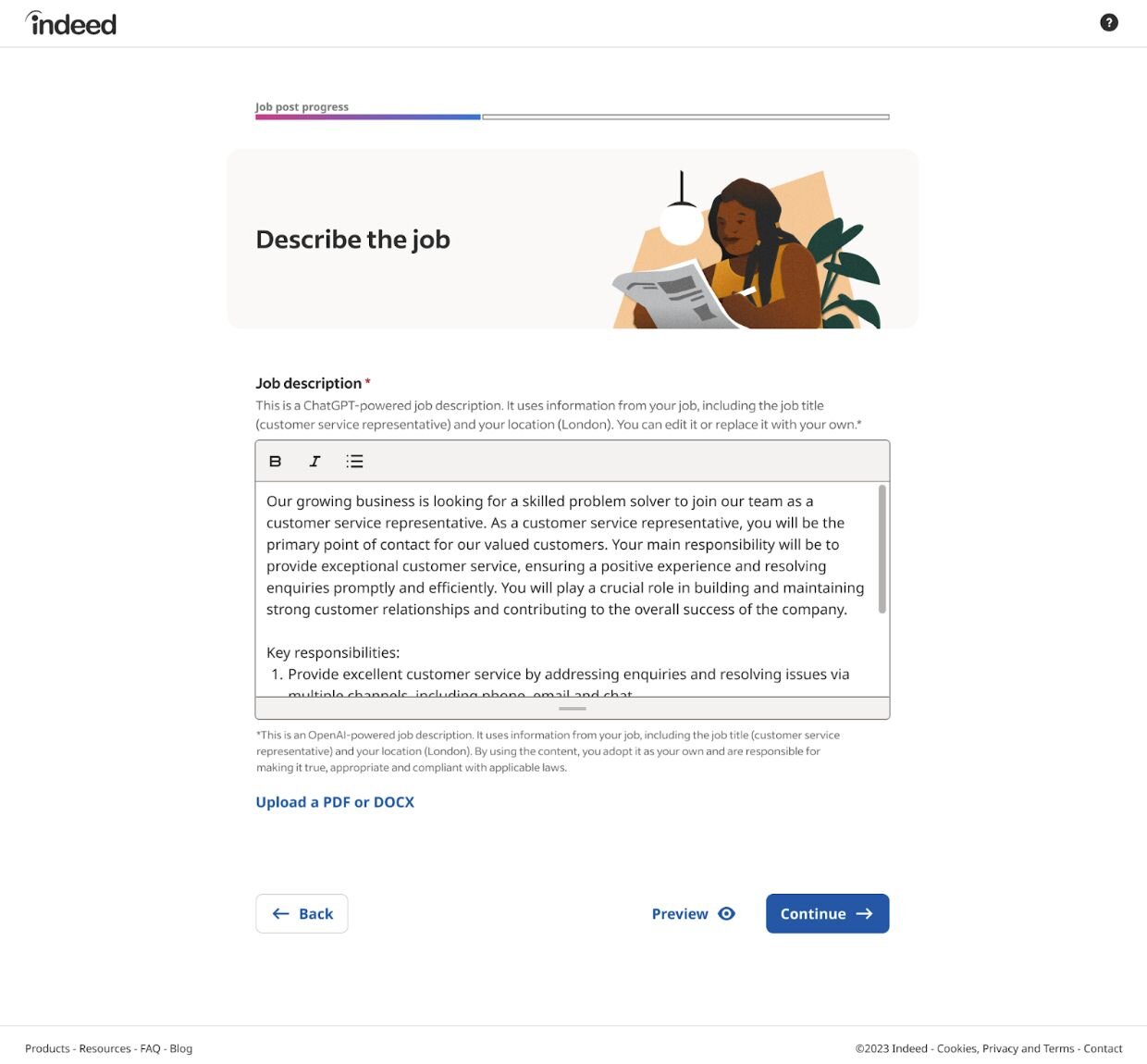

Because these tools don’t interact with candidate data at all, they are relatively safe from bias-related risks. Examples of Light AI range from simple tasks like generating job descriptions to more complex applications like creating an automated skills taxonomy. In these cases, AI analyzes large volumes of job postings, training materials, and labor market data to identify key skills, group them into categories, and map how they relate to each other. The result is a dynamic, structured framework that shows which skills are relevant to specific roles, how they connect, and how they evolve over time.

Light AI tools speed up hiring, but they don’t process resumes or judge how well candidates suit specific jobs. They help hiring managers move faster without influencing who gets hired. The goal of this design approach is to prioritize speed and efficiency while keeping the hiring decision entirely human.

Source: Indeed ChatGPT-powered job description feature

Level 2: Moderate Risk — Assistive AI

These tools take your AI-powered hiring system to the next level with more automation and a little more risk. Assistive tools help you filter for top candidates but leave the hiring decision to you.

For instance, AI can help you filter resumes based on keywords, experience, or certifications. It can analyze your job description and recommend the candidates who best match it, or rank candidates in AI-sorted lists.

While these tools relieve hiring managers of tasks that would otherwise take dozens of hours, they also shape, at least partially, which candidates get prioritized.

After all, it’s still the AI calling the shots on who appears first in the filtered results. Because these tools recommend candidates based on how well they match job descriptions or certain keywords, they rely on more sophisticated machine learning. The flip side is that they require regular testing for bias.

Level 3: High Risk — Decision-Making AI

In February 2023, Derek Mobley, an IT professional from North Carolina, sued the AI-powered hiring platform Workday. He alleged that Workday rejected over 100 of his job applications in seven years because of his age, race, and disabilities. Since then, four other plaintiffs, all over the age of 40, have made similar allegations against the company.

Together, they submitted hundreds of job applications through Workday and were rejected each time, sometimes within minutes. According to the complaints, Workday’s algorithm “disproportionately disqualifies individuals over the age of forty (40) from securing gainful employment”. Making things worse for Workday, in 2024 a federal court allowed the case to move forward as a potential class action.

To cut a long story short, the complaints describe a company relying heavily on decision-making AI. Decision-making AI tools push existing AI capabilities into the riskiest zone. These tools automate hiring end to end: they recommend, score, or reject candidates without anyone having to look at how those decisions are made.

Another example of heavy reliance on decision-making AI in recruitment was HireVue’s use of AI-based facial analysis in video interviews. The platform, however, dropped the facial analysis component from its screening after facing public backlash.

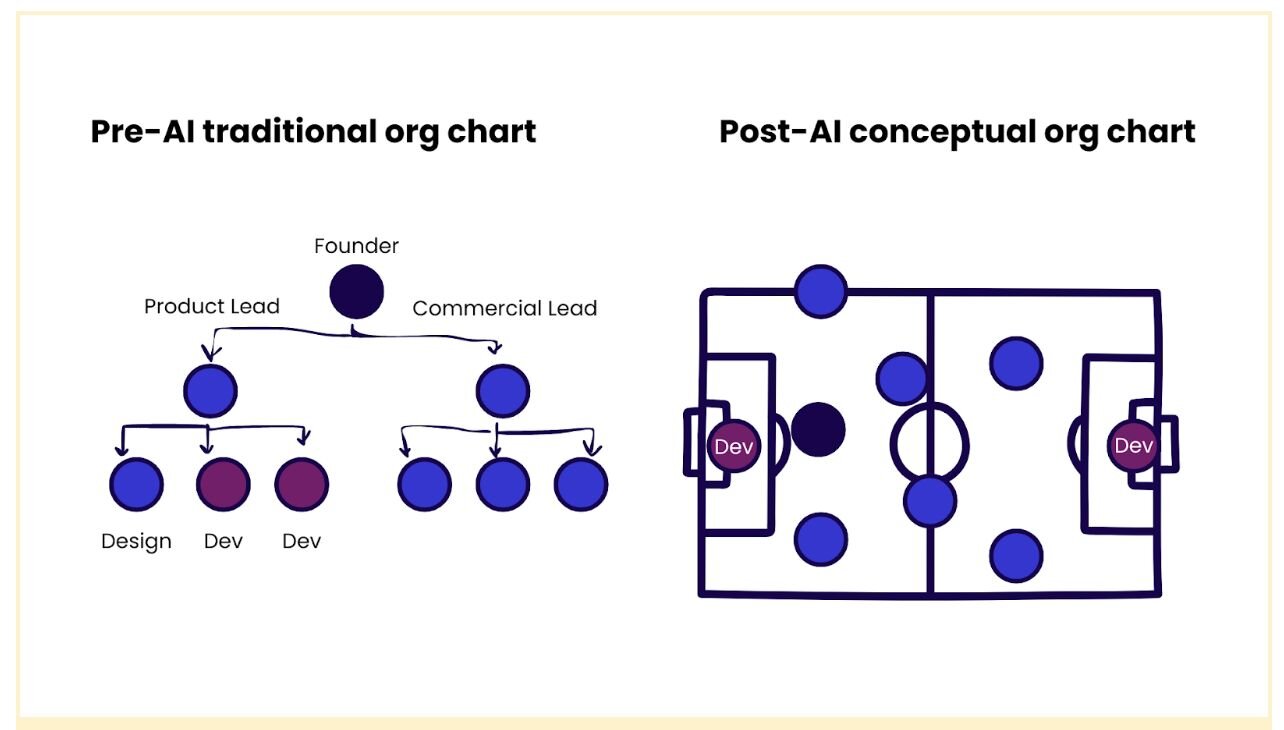

So, what can you do about it as a product manager?

If you’re building a recruitment tool, the design choices you make will determine how much bias shows up in the system. But even before making those choices, we need to recognize the biggest challenges and frictions product teams face when building AI hiring tools. The first problem often starts with the data itself. Most hiring datasets reflect existing imbalances: patterns of who was hired, promoted, or overlooked in the past. Instead of correcting these inequities, models trained on such data often reinforce them, carrying bias into every decision.
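One common way to check historical hiring data for this kind of imbalance (a technique I am adding here, not one the article prescribes) is to compare selection rates across groups. The minimal sketch below applies the “four-fifths rule”, a rule of thumb from the US Uniform Guidelines on Employee Selection Procedures: a group selected at less than 80% of the rate of the most-selected group warrants a closer look. The function names, group labels, and data are hypothetical and made up for illustration.

```python
from collections import Counter


def selection_rates(records):
    """records: (group, was_selected) pairs from past hiring decisions."""
    applied = Counter()
    selected = Counter()
    for group, was_selected in records:
        applied[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / applied[group] for group in applied}


def impact_ratios(records):
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no selections recorded; ratios are undefined")
    return {group: rate / highest for group, rate in rates.items()}


def flag_adverse_impact(records, threshold=0.8):
    """Groups whose impact ratio falls below the four-fifths threshold."""
    return {g: r for g, r in impact_ratios(records).items() if r < threshold}


# Made-up illustration data, not real hiring outcomes.
HISTORY = (
    [("group_a", True)] * 30 + [("group_a", False)] * 70
    + [("group_b", True)] * 15 + [("group_b", False)] * 85
)

if __name__ == "__main__":
    print(flag_adverse_impact(HISTORY))  # group_b is selected at half group_a's rate
```

A ratio below 0.8 is a signal to investigate the data before training on it, not proof of discrimination, and a ratio above it is not proof of fairness.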

Even if the data looks solid, LLMs bring another complication: they don’t always know when they are wrong. So, they can misinterpret resumes or score candidates unfairly, all while sounding completely confident. The trickier part is that attempts to make models more transparent don’t always help either. Chain-of-thought reasoning, where the AI explains its steps, can make bad logic sound more persuasive. A candidate who took a career break for caregiving or retraining might be flagged as inconsistent, and the explanation, though flawed, may appear rational enough to mislead hiring teams.

Sometimes the errors are entirely fabricated. To fill in gaps, AI models can invent details, producing inaccurate summaries or interview feedback. A tool might claim a candidate “lacked leadership skills” even when their resume listed years of managerial experience.

The risks can escalate further when we automate rejections. Allowing a model to disqualify applicants without human oversight can hardwire biases, eliminate transparency, and expose organizations to discrimination claims.

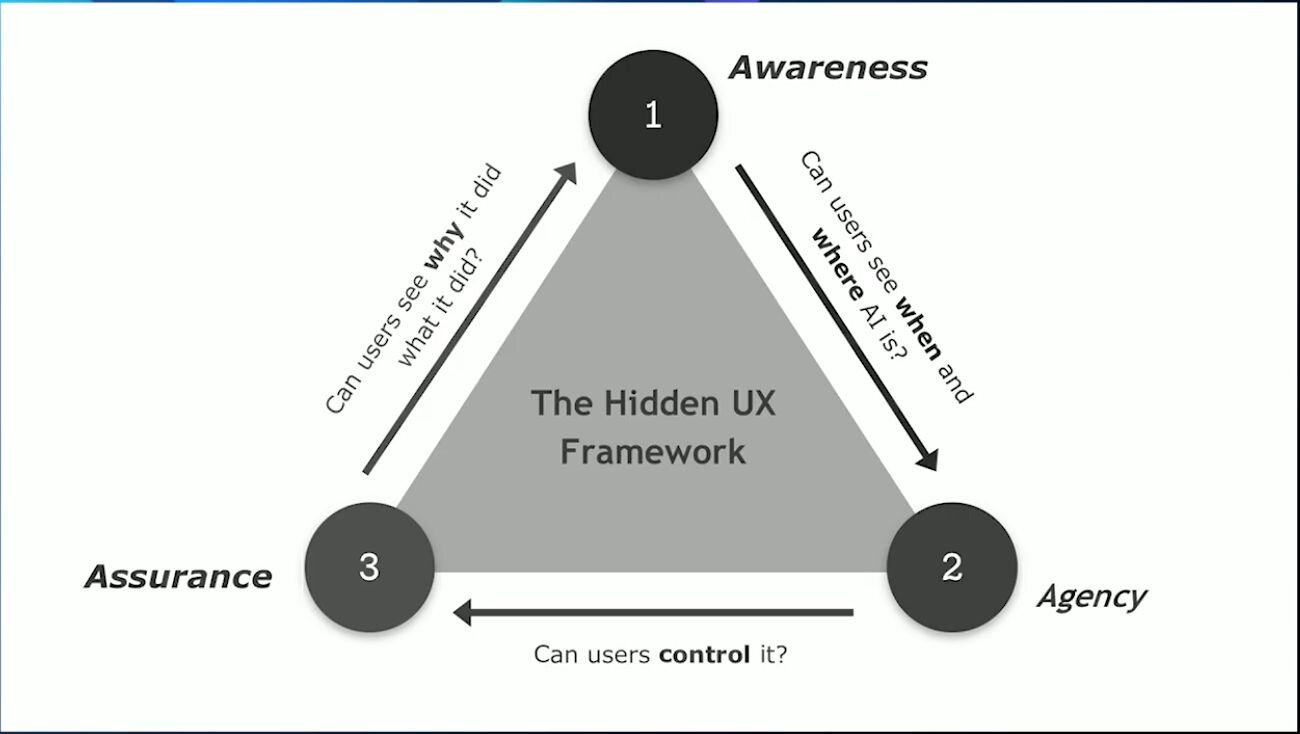

Finally, there is the tension between accuracy and explainability. Complex models may promise sharper candidate matching, but if recruiters and applicants cannot see why decisions were made, it’s hard to establish trust. This means hiring is probably too sensitive to leave in the hands of black-box systems.

The real question now is how to build recruitment tools that address these risks without letting them overshadow the product.

First, start with content-based tools, not autonomous ones. Content-based tools, which mostly fall under the light AI category discussed above, support human judgment rather than replacing it. Recruitment is not a mechanized function, and there is a risk of over-optimization, especially with the temptation of hundreds of new AI tools. Content-based tools speed up the process by posting jobs faster or summarizing and sorting information quickly, without auto-scoring or rejecting candidates.

Next, assume that some level of bias may creep in, even with light AI or assistive AI tools. For instance, a job description generated by a text-based tool can contain biased language. Inadvertently using terms like “rockstar developer” or “aggressive negotiator” may subtly signal a preference for masculine traits, which can alienate qualified female candidates and other underrepresented groups.
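A lightweight guardrail is to scan generated job descriptions for flagged terms before they are published. The sketch below is a minimal illustration, assuming a hand-maintained word list; the terms come from examples in this article, while the suggested replacements, the `FLAGGED_TERMS` table, and `flag_job_description` are hypothetical. A production vocabulary would need to be much larger, researched, and regularly reviewed.

```python
import re
from dataclasses import dataclass

# Hypothetical starter vocabulary: flagged term -> more neutral alternative.
FLAGGED_TERMS = {
    "rockstar": "skilled",
    "ninja": "expert",
    "guru": "specialist",
    "aggressive": "persuasive",
    "salesman": "sales associate",
}


@dataclass
class Flag:
    term: str        # text exactly as it appears in the description
    suggestion: str  # neutral alternative to offer the writer
    start: int       # character offset, so a UI can highlight the match


def flag_job_description(text: str) -> list[Flag]:
    """Return flagged terms in the order they appear in `text`."""
    found = []
    for term, suggestion in FLAGGED_TERMS.items():
        # \b prevents partial-word hits; IGNORECASE also catches "Rockstar".
        pattern = rf"\b{re.escape(term)}\b"
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            found.append(Flag(match.group(0), suggestion, match.start()))
    return sorted(found, key=lambda f: f.start)


if __name__ == "__main__":
    jd = "We need a Rockstar developer and an aggressive negotiator."
    for f in flag_job_description(jd):
        print(f"{f.term!r}: consider {f.suggestion!r}")
```

Returning suggestions instead of rewriting the text automatically keeps the writer in control of the final wording.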

Now, let’s look at a slightly more active assistive use. Suppose you want to use a tool to sort candidates by the length of their past work experience. On the surface, this may look perfectly neutral; however, there is always a chance that the tool amplifies biases baked into the patterns it learned. Years of experience, for instance, can act as a proxy for age and can penalize candidates who took career breaks.

What you can do is build regular bias checks into your development process. Submit a diverse range of test resumes, rotate attributes like names, education, and age, and then check the results: do the same queries consistently favor specific profiles?
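The rotation test described above is often called a counterfactual audit: keep a resume identical and change one attribute at a time. The minimal sketch below assumes the model under test can be wrapped as a function that takes a resume and returns a score; `counterfactual_audit`, the resume fields, the `tolerance` value, and the deliberately biased `toy_score` stand-in are all hypothetical.

```python
from typing import Callable

# Hypothetical resume shape: flat attribute -> value strings.
Resume = dict[str, str]


def counterfactual_audit(
    score: Callable[[Resume], float],
    base: Resume,
    variations: dict[str, list[str]],
    tolerance: float = 0.05,
) -> dict[str, dict[str, float]]:
    """Vary one attribute at a time on an otherwise identical resume.

    Returns the attributes whose values moved the score by more than
    `tolerance`, with the score observed for each value tried.
    """
    findings: dict[str, dict[str, float]] = {}
    for attribute, values in variations.items():
        scores = {value: score({**base, attribute: value}) for value in values}
        if max(scores.values()) - min(scores.values()) > tolerance:
            findings[attribute] = scores
    return findings


def toy_score(resume: Resume) -> float:
    """Deliberately biased stand-in for the model under test (hypothetical)."""
    s = 0.5
    if "python" in resume["skills"].lower():
        s += 0.3  # job-relevant signal
    if resume["education"] == "Ivy League":
        s += 0.2  # prestige bonus the audit should catch
    return s


BASE = {"name": "Alex Kim", "education": "State University", "age": "45", "skills": "Python, SQL"}
VARIATIONS = {
    "name": ["Alex Kim", "Maria Garcia", "Jamal Washington", "Emily Walsh"],
    "education": ["State University", "Ivy League", "Community College"],
    "age": ["25", "45", "62"],
}

if __name__ == "__main__":
    print(counterfactual_audit(toy_score, BASE, VARIATIONS))  # only "education" is reported
```

Changing one attribute at a time keeps the results easy to interpret, but it can miss interactions (for example, name and age together), and a non-deterministic scorer should be run several times per variant.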

Third, try to keep the human touch alive in your recruiting tool. All said and done, hiring is a relationship-based, trust-driven process, so we must build tools that help people build connections. Empowering human judgment needs to be at the center of product design.

Here is a more actionable example: instead of auto-rejecting resumes based on keyword mismatches, consider designing a “human-in-the-loop” review system where AI clusters applicants into groups (for example, high match, partial match, non-traditional background) but leaves the final screening decision to the recruiter.
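A minimal sketch of that grouping step, assuming skills have already been extracted and normalized to lowercase strings, and that some upstream process (hypothetical here) marks non-traditional backgrounds such as career changers. The key design choice is structural: `ReviewQueue` has no “rejected” group, so every applicant ends up in front of a recruiter. `Applicant`, `ReviewQueue`, `triage`, and the 0.8 threshold are illustrative names and values, not a specific product’s design.

```python
from dataclasses import dataclass, field


@dataclass
class Applicant:
    name: str
    skills: set[str]  # assumed already normalized to lowercase
    # Hypothetical signal, e.g. set for career changers or bootcamp graduates.
    non_traditional_background: bool = False


@dataclass
class ReviewQueue:
    """Every applicant lands in exactly one group; there is no rejected group."""
    high_match: list[Applicant] = field(default_factory=list)
    partial_match: list[Applicant] = field(default_factory=list)
    non_traditional: list[Applicant] = field(default_factory=list)


def triage(applicants: list[Applicant], required: set[str], high: float = 0.8) -> ReviewQueue:
    """Group applicants for recruiter review; the final decision stays with a person."""
    if not required:
        raise ValueError("required skills must not be empty")
    queue = ReviewQueue()
    for applicant in applicants:
        overlap = len(applicant.skills & required) / len(required)
        if applicant.non_traditional_background:
            # Kept separate so keyword overlap alone cannot bury these profiles.
            queue.non_traditional.append(applicant)
        elif overlap >= high:
            queue.high_match.append(applicant)
        else:
            queue.partial_match.append(applicant)
    return queue


if __name__ == "__main__":
    pool = [
        Applicant("A", {"python", "sql", "aws"}),
        Applicant("B", {"python"}),
        Applicant("C", {"python", "sql"}, non_traditional_background=True),
    ]
    q = triage(pool, {"python", "sql", "aws"})
    print([a.name for a in q.high_match], [a.name for a in q.partial_match], [a.name for a in q.non_traditional])
```

The groups only change the order in which a recruiter looks at profiles; nothing is filtered out.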

Lastly, educate your users. Let them know about potential instances of bias (going beyond vague disclaimers that nobody reads) so that they can make informed decisions. Embedding real-time education can be an effective way to warn users about potential bias. If a search query contains potentially biased language (for example, “Ivy League” or “young and enthusiastic”), the tool should automatically generate a warning. Offering “inclusive search” suggestions can also help reduce bias.
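Here is a minimal sketch of such a real-time warning, assuming a small rule table of regular expressions. The rules, wording, and `check_query` function are hypothetical; the point is that the tool explains why a query is risky and offers an inclusive alternative, rather than silently blocking the search.

```python
import re

# Hypothetical rules: (pattern, why it is risky, inclusive alternative).
QUERY_RULES = [
    (
        r"\bivy league\b|\btop[- ]tier schools?\b",
        "School prestige filters can exclude qualified candidates from other backgrounds.",
        "Search for the skills or coursework the role actually needs.",
    ),
    (
        r"\byoung\b|\brecent grads? only\b",
        "Age-coded wording can screen candidates out by age.",
        "Describe the skills and level of experience required instead.",
    ),
]


def check_query(query: str) -> list[tuple[str, str]]:
    """Return (warning, suggestion) pairs for every rule the query trips."""
    return [
        (warning, suggestion)
        for pattern, warning, suggestion in QUERY_RULES
        if re.search(pattern, query, flags=re.IGNORECASE)
    ]


if __name__ == "__main__":
    for warning, suggestion in check_query("young and enthusiastic Ivy League engineer"):
        print(f"Warning: {warning}\n  Try: {suggestion}")
```

The word boundaries (`\b`) keep the rules from firing on unrelated words such as “Youngstown”; keyword rules like these will still miss paraphrases, so they complement rather than replace the bias testing described earlier.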

Also, consider explaining to your users how the AI tool works behind the scenes so they can make better decisions.

Could we use AI to eliminate bias? And what would that look like?

A line often attributed to Albert Einstein goes, “You can’t solve a problem with the same level of thinking that created it.” Along those lines, maybe the best way to tackle bias is not to fix it after it happens but to stop it from showing up in the first place. I wonder: if we had AI that truly understood bias and was trained to prevent it, could it also teach humans to be fairer?

In some ways, that’s already happening.

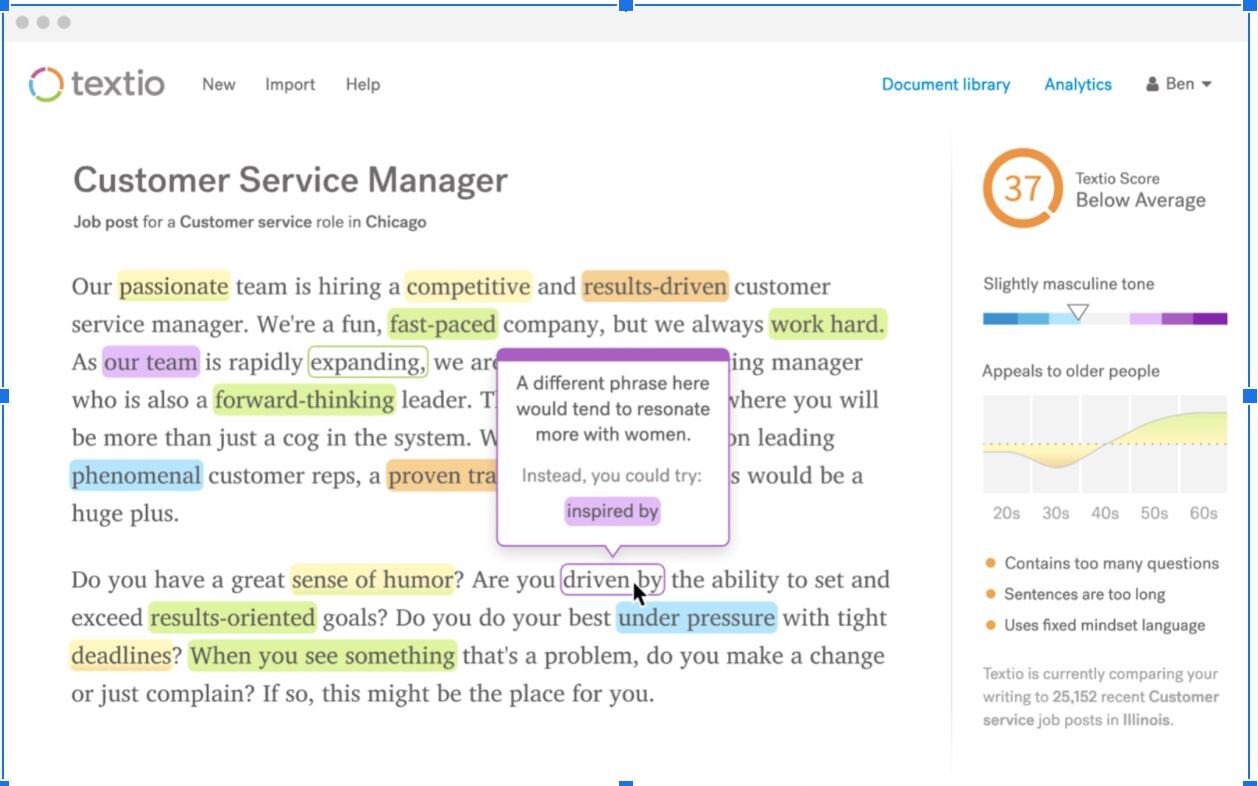

Textio, an NLP-powered platform, nudges recruiters toward more inclusive, less biased job descriptions. Whenever the tool detects potentially problematic language in a job description, it highlights the issue and suggests alternative wording in real time. For example, it flags non-inclusive or biased terms like blacklist, salesman, waitress, ninja, rockstar, and guru, and recommends more neutral replacements like blocklist, sales associate, expert, or specialist.

Source: Textio

Another great example is Ashby. Whenever hiring managers apply filters that can reinforce bias (for example, filtering by specific schools or overly narrow career paths), the tool generates a warning. For instance, if a recruiter searches only for candidates from “top-tier” schools or within narrow age ranges, the tool prompts them to reconsider the filters.

Perhaps we can stretch this thinking even further. What if AI tools didn’t just react to biased inputs, but actively proposed more inclusive alternatives, flagged biased patterns over time, or even coached hiring teams on fairer practices?

Final thoughts

Companies need the best talent to grow, and the best talent comes from a recruitment system that works. AI can speed up a lot of work and automate a few tasks without much trouble. But there is always a danger of over-optimizing, which can open that proverbial Pandora’s box of problems you don’t want to encounter.

As product managers, our job is to shape technologies that work well with people and not reduce them to a few machine-readable patterns. What am I missing?