What if most AI failures were actually team design issues disguised as tech problems?

I watched Patrick Debois discuss Conway's Law and AI, and a clear pattern emerged. Conway's Law says organizations design systems that mirror their own communication structures. But Debois points out something interesting: AI is flipping this around. Instead of our organization shaping the technology, AI is starting to change how we organize ourselves.

This mindset shift lets us focus on the "why" instead of the "how": we become managers of intent rather than just implementers. So when AI projects fail, the problem might not be purely technical.

Why Your Teams Keep Hitting Roadblocks

Think about the AI projects you've seen stumble. Their failures might trace back to these team patterns:

Data and modeling teams working in silos

- In my experience working with AI teams, the biggest obstacle isn't technical complexity; it's alignment. When teams don't share a clear understanding of what they're building and why, even the best models fail to create value. This matches RAND's finding that misunderstandings and miscommunications about a project's intent and purpose are the most common reasons AI projects fail.

Research teams isolated from production teams

- Many teams focus heavily on models and overlook the supporting infrastructure, so projects that run smoothly in development often fail under real-world conditions.

AI teams disconnected from business needs

- A common early mistake is starting with a tool instead of a real problem and treating the technology as a magic solution. AI does not solve problems by itself.

Your org chart might be creating these difficulties.

The Reverse Conway Method

Conway's Law holds that team structure becomes system structure, but Debois offers another point of view: a Reverse Conway's Law. Your teams used to dictate your AI architecture. Now AI is changing how you organize.

This happens in stages. AI starts out as a co-pilot and helps with tasks. Then it becomes a team member with access to collective knowledge. Eventually, it might become a peer that can work alongside humans. At each stage, teams need different structures.

You need a deliberate approach to building teams that can adapt as AI moves through these stages:

Step 1: Define Your Desired AI Setup First

Draw your ideal AI system on a whiteboard. Include data flows, decision points, and user touchpoints. This becomes your team design plan.

Step 2: Build Teams Around Business Problems

Create a "personalization team" and "search team" instead of a "data engineering team" and "ML team."

Traditional functional structure:

- Data Engineering Team

- ML Research Team

- AI Infrastructure Team

Business-focused structure:

- Customer Experience Team (data scientists, engineers, product manager, designer)

- Growth & Retention Team (data scientists, engineers, product manager)

- Platform & Tools Team (ML engineers, infrastructure engineers)

Each product-facing team owns a complete user journey instead of a technical function, while the Platform & Tools Team provides the shared infrastructure they build on.

Step 3: Ensure End-to-End Team Ownership

Every team should include all the roles needed for end-to-end delivery, with the caveat that AI might change team sizes. When AI handles more domain knowledge, teams might become smaller because you need less overlapping human expertise. However, this doesn't eliminate the need for human expertise in validating AI outputs and ensuring quality. It shifts human focus toward oversight and strategic guidance rather than routine execution. Here’s an example:

Practical setup for a Personalization Team:

- Product Manager (defines what actually works)

- Data Scientists (focus on intent, not just implementation)

- ML Engineers (supervise AI-generated solutions)

- Data Engineer (oversees data pipeline automation)

- Designer (ensures human-centered AI experiences)

The key shift: humans focus on "what actually works" while AI handles more of the "how."

To make this shift work, you need a systematic way to evaluate your current setup and design your target state. The following three-step assessment helps you move from identifying problems to implementing solutions.

The Three-Step Assessment

Step 1: Map Your Actual Communication Patterns

What to do: Document who really talks to whom, not what the org chart says should happen.

How to do it:

- Track meeting attendees for AI-related discussions over 2-4 weeks

- Survey team members: "Who do you need to talk to most often to get your AI work done?"

Output: A visual map showing actual collaboration patterns vs. official reporting lines.
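If you export attendee lists from your calendar, a few lines of code can produce the raw counts behind that map. The following is a minimal sketch. It assumes a hypothetical `ORG_CHART` dictionary that maps each person to an official team, plus a `MEETINGS` list of attendee sets. Both contain made-up sample data. The sketch counts person-to-person interactions between each pair of official teams and keeps only the cross-team pairs, which are the links the org chart doesn't show.

```python
from collections import Counter
from itertools import combinations

# Hypothetical sample data: person -> official team on the org chart.
ORG_CHART = {
    "ana": "Data Engineering",
    "ben": "ML Research",
    "chen": "ML Research",
    "dee": "Product",
}

# Hypothetical attendee lists from AI-related meetings during the tracking window.
MEETINGS = [
    {"ana", "ben", "dee"},
    {"ben", "chen"},
    {"ana", "ben"},
    {"ben", "dee"},
]


def team_interactions(meetings, org_chart):
    """Count person-to-person interactions between each pair of official teams."""
    counts = Counter()
    for attendees in meetings:
        # Every pair of attendees in the same meeting counts as one interaction.
        for a, b in combinations(sorted(attendees), 2):
            pair = tuple(sorted((org_chart[a], org_chart[b])))
            counts[pair] += 1
    return counts


def cross_team_links(counts):
    """Keep only interactions that cross official team boundaries."""
    return {pair: n for pair, n in counts.items() if pair[0] != pair[1]}


if __name__ == "__main__":
    links = cross_team_links(team_interactions(MEETINGS, ORG_CHART))
    for (team_a, team_b), n in sorted(links.items(), key=lambda kv: -kv[1]):
        print(f"{team_a} <-> {team_b}: {n} interactions")
```

Team pairs with high counts that sit in separate reporting lines are the first candidates to examine when you design your target structure. Survey answers can be folded in as extra two-person "meetings."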

Step 2: Identify Your Specific Bottlenecks

What to do: Follow one AI feature from idea to production and document every delay.

How to do it:

- Pick a recent AI feature that took longer than expected

- Map every handoff: Who passed what to whom, and how long did each step take?

- Categorize delays using these common patterns:

Common Bottleneck Patterns:

- The Data Handoff: Data requests sit in tickets for weeks

- The Model Translation: Models are built in Python but must be rewritten for a Java production stack

- The Approval Loop: Simple experiments need multiple sign-offs

- The Knowledge Gap: Business needs get lost in translation

Output: A timeline showing where time was lost and why.
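Once you've reconstructed the handoffs from tickets or chat history, a small script can turn them into that timeline and total the delay per bottleneck pattern. This sketch assumes a hypothetical `Handoff` record with start and finish dates and a category label taken from the patterns above. The teams and dates are illustrative, not real data.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass
class Handoff:
    """One step on a feature's path from idea to production (hypothetical schema)."""
    sender: str
    receiver: str
    artifact: str
    started: date
    finished: date
    category: str  # a bottleneck pattern name, or "none"

    @property
    def days(self) -> int:
        return (self.finished - self.started).days


def time_lost_by_category(handoffs):
    """Total elapsed days per bottleneck category, largest first."""
    totals = defaultdict(int)
    for h in handoffs:
        totals[h.category] += h.days
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


# Illustrative handoffs for one feature; not real data.
FEATURE = [
    Handoff("Product", "Data Engineering", "data request",
            date(2024, 3, 1), date(2024, 3, 22), "The Data Handoff"),
    Handoff("Product", "ML Research", "success metric",
            date(2024, 3, 22), date(2024, 3, 25), "none"),
    Handoff("ML Research", "AI Infrastructure", "trained model",
            date(2024, 3, 25), date(2024, 4, 15), "The Model Translation"),
    Handoff("AI Infrastructure", "Risk Review", "A/B test plan",
            date(2024, 4, 15), date(2024, 4, 26), "The Approval Loop"),
]

if __name__ == "__main__":
    # Print the timeline in order, then the totals per bottleneck pattern.
    for h in sorted(FEATURE, key=lambda h: h.started):
        print(f"{h.started} {h.sender} -> {h.receiver} ({h.artifact}): {h.days} days [{h.category}]")
    print(time_lost_by_category(FEATURE))
```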

Step 3: Design Your Target Team Structure

What to do: Work backwards from user experience to define ideal teams.

How to do it:

- Begin by sketching the customer experience you're targeting

- Figure out what AI capabilities you'll need at different stages

- Form teams that can own these capabilities end-to-end

- Compare this to how you're currently organized and create your transition strategy

Output: A new team structure diagram with clear ownership boundaries.
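To compare current and target structures, it can help to write down which teams must touch a change to each capability before it ships. The sketch below assumes a hypothetical capability-to-teams mapping. It borrows the team names used earlier, but the assignments are illustrative. It also makes a simplifying assumption: a change that passes through n teams needs n - 1 handoffs.

```python
# Hypothetical capability -> teams that must touch a change before it ships.
CURRENT = {
    "recommendations": ["Data Engineering", "ML Research", "AI Infrastructure"],
    "search ranking": ["Data Engineering", "ML Research", "AI Infrastructure"],
    "churn prediction": ["ML Research"],
}

# Target state: each capability has exactly one owning team.
TARGET = {
    "recommendations": ["Customer Experience"],
    "search ranking": ["Customer Experience"],
    "churn prediction": ["Growth & Retention"],
}


def handoffs(teams):
    """Simplifying assumption: a change passing through n teams needs n - 1 handoffs."""
    return max(len(teams) - 1, 0)


def transition_plan(current, target):
    """List capabilities whose ownership changes, with handoff counts before and after."""
    plan = []
    for capability, new_teams in target.items():
        old_teams = current.get(capability, [])
        if old_teams != new_teams:
            plan.append({
                "capability": capability,
                "from": old_teams,
                "to": new_teams,
                "handoffs_before": handoffs(old_teams),
                "handoffs_after": handoffs(new_teams),
            })
    return plan


def single_owner(structure):
    """True if every capability has exactly one owning team (clear boundaries)."""
    return all(len(teams) == 1 for teams in structure.values())


if __name__ == "__main__":
    print("Clear ownership in target:", single_owner(TARGET))
    for step in transition_plan(CURRENT, TARGET):
        print(f"{step['capability']}: {step['handoffs_before']} -> {step['handoffs_after']} handoffs")
```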

Once you've completed your assessment, you can address specific issues with these targeted solutions.

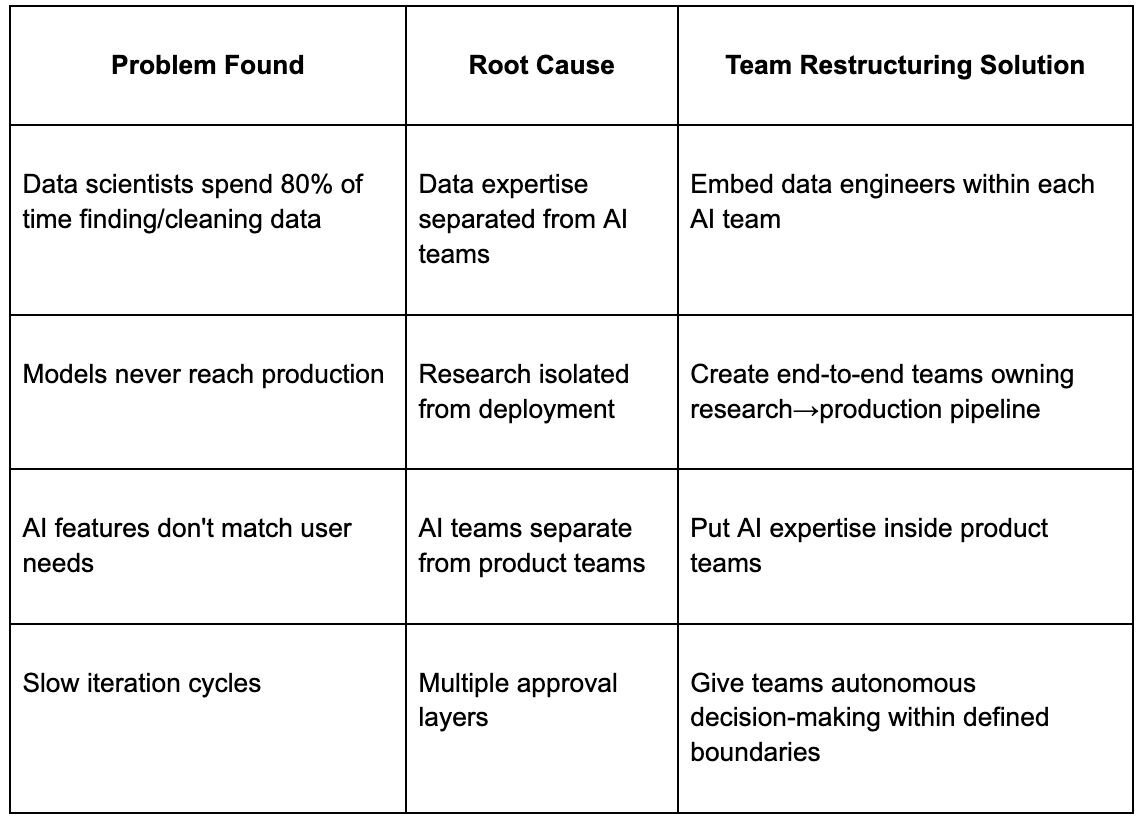

Implementation: Common Problems → Team Solutions

Start with the bottlenecks your assessment surfaced and apply the matching team-level fix:

For slow iteration cycles specifically: Create clear guidelines for what teams can deploy without extensive approvals. Low-risk changes like A/B tests and UI tweaks can go out immediately, while high-risk algorithm changes will still need review.
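Guidelines like these can be encoded as a small policy table that tooling or a deploy checklist can consult. This is a hypothetical sketch, not a prescribed setup. The change-type names and sign-off counts are assumptions to adapt to your own rules.

```python
from enum import Enum


class Risk(Enum):
    LOW = "low"
    HIGH = "high"


# Hypothetical policy table mapping change types to risk tiers.
CHANGE_RISK = {
    "ab_test": Risk.LOW,
    "ui_tweak": Risk.LOW,
    "algorithm_change": Risk.HIGH,
}

# Assumed sign-off counts per tier; adjust to your own guidelines.
APPROVALS_NEEDED = {Risk.LOW: 0, Risk.HIGH: 1}


def required_approvals(change_type: str) -> int:
    """Sign-offs needed before shipping; unknown change types are treated as high risk."""
    risk = CHANGE_RISK.get(change_type, Risk.HIGH)
    return APPROVALS_NEEDED[risk]


def can_ship_now(change_type: str) -> bool:
    """True if the change can go out immediately without review."""
    return required_approvals(change_type) == 0


if __name__ == "__main__":
    for change in ("ab_test", "ui_tweak", "algorithm_change", "new_data_source"):
        status = "ships now" if can_ship_now(change) else f"needs {required_approvals(change)} review(s)"
        print(change, status)
```

Unknown change types default to high risk, so the fast path stays opt-in. A change type only skips review after the team has explicitly agreed that it is low risk.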

Measuring Success

Technical indicators:

- Increase AI model deployment frequency

- Shorten data pipeline lead time so people can access data quickly

- Reduce model inference latency and ensure models are production-ready

- Speed up rollout and experiments with feature flags
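Two of these indicators are easy to compute once you have event timestamps. The following sketch assumes hypothetical, illustrative logs of deployment dates and data requests (requested vs. available). In practice these would come from your CI/CD and ticketing exports.

```python
from datetime import datetime
from statistics import median

# Hypothetical event logs; illustrative values only.
DEPLOYS = [datetime(2024, 5, d) for d in (1, 3, 8, 10, 15, 22, 24, 27)]
DATA_REQUESTS = [  # (requested, data available)
    (datetime(2024, 5, 1, 9), datetime(2024, 5, 3, 9)),
    (datetime(2024, 5, 6, 9), datetime(2024, 5, 16, 9)),
    (datetime(2024, 5, 13, 9), datetime(2024, 5, 14, 21)),
]


def deploys_per_week(deploys, start, end):
    """Average number of model deployments per week in the window [start, end)."""
    weeks = (end - start).days / 7
    return sum(start <= d < end for d in deploys) / weeks


def median_lead_time_hours(requests):
    """Median hours from a data request until the data is usable."""
    return median((ready - asked).total_seconds() / 3600 for asked, ready in requests)


if __name__ == "__main__":
    print(deploys_per_week(DEPLOYS, datetime(2024, 5, 1), datetime(2024, 5, 29)))
    print(median_lead_time_hours(DATA_REQUESTS))
```

The median is used for lead time so that a single stuck ticket doesn't mask a general improvement. Either number is most useful as a trend across periods rather than as an absolute target.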

Organizational indicators:

- Increase participation in cross-functional standups, with daily collaboration across data, AI, and product teams

- Reduce decision cycle time so AI feature approval happens in days instead of months

- Eliminate handoff delays and waiting periods between research and engineering teams

- Lower team cognitive load by reducing context switching between multiple approval processes

Business indicators:

- Reduce time-to-market for AI features so ideas reach customers quickly

- Increase adoption rates of AI features within the first month after release

- Attribute revenue gains to AI features to show impact

- Lower the number of support tickets related to confusing AI experiences
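"Adoption rate" needs a precise definition before it can be tracked. The following is one hedged sketch. It assumes the denominator is your active-user base, the window is 30 days from release, and usage events arrive as hypothetical `(user_id, date_of_use)` pairs. All data shown is illustrative.

```python
from datetime import date, timedelta


def first_month_adoption(release, active_users, feature_uses):
    """Share of active users who used the feature within 30 days of release.

    feature_uses: iterable of (user_id, date_of_use) pairs (hypothetical event schema).
    """
    if not active_users:
        return 0.0
    cutoff = release + timedelta(days=30)
    adopters = {
        user for user, used_on in feature_uses
        if user in active_users and release <= used_on < cutoff
    }
    return len(adopters) / len(active_users)


# Illustrative data only.
RELEASE = date(2024, 6, 1)
ACTIVE = {"u1", "u2", "u3", "u4"}
USES = [
    ("u1", date(2024, 6, 2)),
    ("u1", date(2024, 6, 5)),   # repeat use by the same user counts once
    ("u3", date(2024, 6, 20)),
    ("u2", date(2024, 7, 15)),  # outside the first 30 days
    ("x9", date(2024, 6, 3)),   # not in the active-user base
]

if __name__ == "__main__":
    print(first_month_adoption(RELEASE, ACTIVE, USES))
```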

Structure as Strategy

Think about your last AI project that struggled. You probably blamed the model accuracy, data quality, or technical complexity. But what if your org chart was amplifying these problems?

Your AI problems are likely technical-organizational problems, since technology and teams co-evolve in reinforcing loops. Poor team structure leads to bad data practices. Bad data practices create technical debt. Technical debt makes collaboration harder. Harder collaboration worsens team structure.

When AI projects fail, it signals that both your technology and your teams need to adapt. The solution isn't choosing between technical fixes or organizational fixes. It's recognizing that advanced technology and effective processes must develop together.

Fix your teams, and you'll surface the real technical challenges that matter. Fix your technical infrastructure, and you'll enable the collaboration patterns that actually work. Do both, and you turn structural problems into competitive advantages.