At #mtpcon San Francisco, Leisa Reichelt, head of research and insights at Atlassian, took on our current approach to user research and how we use evidence to justify doing completely the wrong thing.

Five years ago, the industry was focused on just getting people to do any user research at all. Product managers weren’t regularly talking to customers. These days, the drive for companies to be “customer obsessed” has brought about a new era where everyone is talking to customers. Designers, product managers, and researchers all think that doing user research is a primary function of their job. And while research is best done as a team, how much time are we spending growing our expertise in research so that we do it well?

While we should be out there doing research, we’re letting bad research pass for evidence, and we’re making decisions based on data that was never fit for that purpose. We mess up research by trying to quantify things and make them seem more scientific than they really are.

Perspective and Truth

Looking at only parts of a picture can give a warped perspective, and we can see things that appear meaningful but which aren’t. We have to make sure we are getting a big enough picture in research to make a good decision. If you see the shadow of a duck, it can look very different from the duck itself. This happens in research as well. We are getting a narrow view of data and are making decisions based on the shadow. The shadow is real, but the duck is more real, and we have to broaden our context to get the full truth.

Common Research Mistakes

Customer Interviews

“People can make up an opinion about anything, and they’ll do so if asked.” – Jakob Nielsen

Customer interviews are a common method of user research that teams often get wrong. The answers you get in interviews depend on the questions you ask, and by asking the wrong questions, you can get really excited about data that doesn’t matter at all. We ask feature-based questions such as “What is the thing you do most often in Jira?” and get narrow answers. We should be asking questions like “What are you doing most often when you come to use Jira?” With broad context, you learn about the “why”, uncover gaps and opportunities the product may be missing, and can then dig deeper into the features themselves.

To do better customer interviews:

- Start with wide context: don’t start with questions about your product

- Be user-centered, not product-centered: you should be able to use the same script for a competitor’s product

- Invest in analysis: get transcripts of the interviews, take the time to do affinity diagrams, and really get the full value from the research

Usability Testing

We make decisions based on usability testing all the time. A popular method that has emerged is “Unmoderated Remote Usability Testing.” With this type of testing, you use software that allows you to build tests, post them, and let people complete usability tests from anywhere on their own. It allows you to get a lot of testing done without ever talking to a user directly, but it comes with a lot of pitfalls.

With in-person usability tests, an experienced researcher is able to discern the difference between what a user says and what they do. They can read body language that indicates when a user is frustrated, or notice when a user only completed a task by randomly clicking around a screen. But with unmoderated usability testing, teams often simply download the data rather than actually watch the videos of the tests. Those signals get reduced to a simple “task completed” metric, and quotes and ratings are pulled out without their broader context.

To do better usability testing:

- Make tests task-based, not feature-based: test what people would really be doing, not the feature you wish they’d use

- Watch the videos in real time: don’t just download the data from unmoderated usability testing

- Look beyond task completion: it’s not enough that people eventually reach the right outcome – the quality of the experience along the way matters

Surveys

“Faith in data grows in relation to your distance from the collection of it.” – Scott Berkun

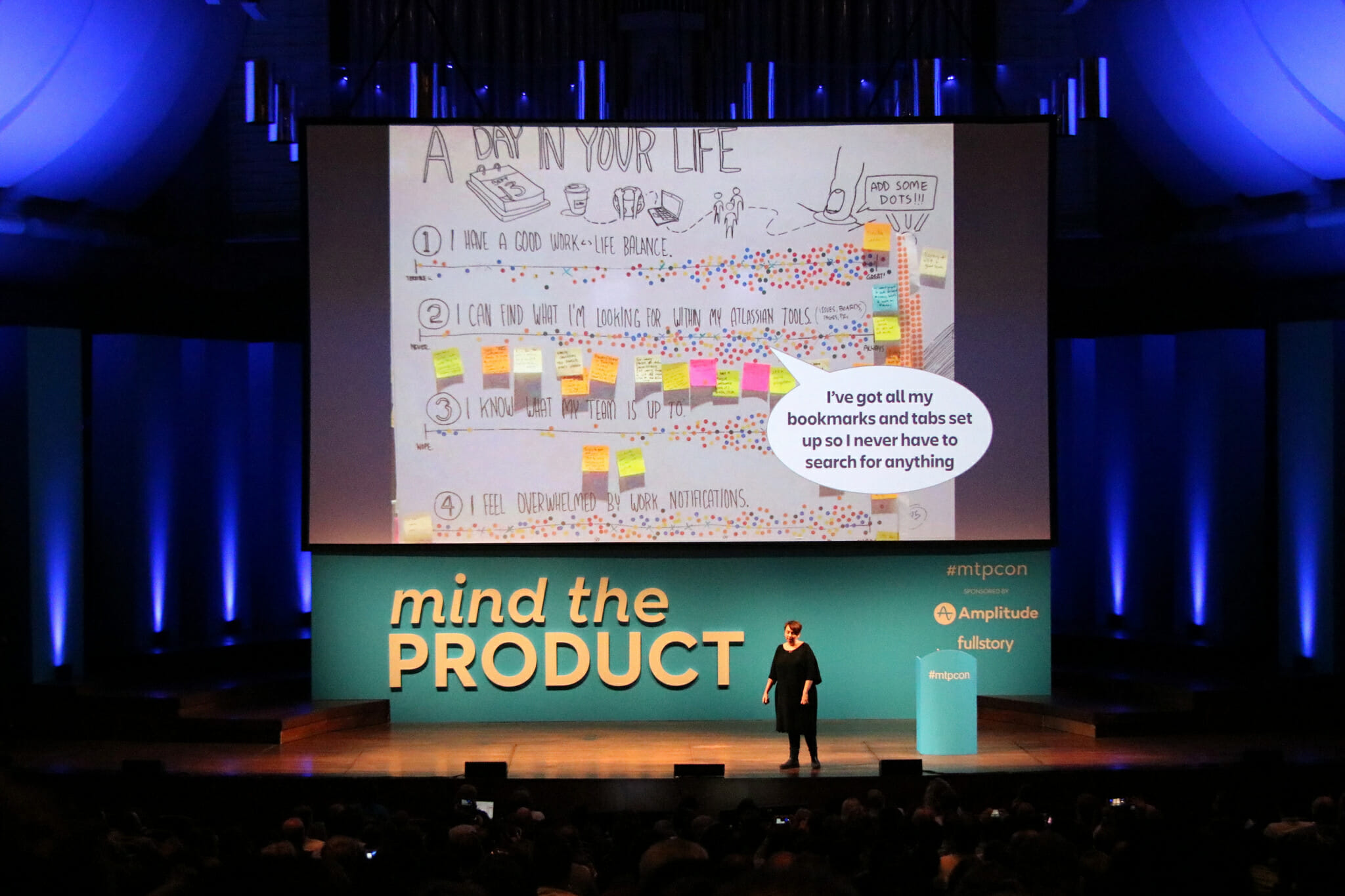

Product managers love surveys. The further away you are from the point where data is collected, the more you trust it. But misleading questions and a lack of context can produce data that tells a very different story from the truth. Leisa gave an example from Atlassian Summit, where a user said in a dot-voting survey that they had great work-life balance. When she asked them about their answer, they said, “I can work from home, so even though I work too much, I have flexibility.” The context gives a different perspective from the data point. Just because you can put it in a graph doesn’t make it science. We need to be far more critical about what data we trust.

To do better surveys:

- Use qualitative techniques before quantitative: do interviews or contextual enquiry before you design a survey

- Triple-test your survey: make sure people understand the questions consistently and are able to answer accurately

- Use humans to analyse words: don’t rely on automated text analysis. Read the feedback.

Stop Quantifying Qualitative Research

Why do we take these research methods and use them incorrectly? Leisa thinks the main reason is that we don’t have time. But if we claim to be “customer obsessed”, why are we trying to box research and empathy into the smallest amount of time possible? Take the time to do it right.

Leisa leaves us with the metric we should actually be aiming for. Rather than doing bad user research, just ask: “In the last six weeks, have you personally observed a customer using your product?” If we’re going to make better products, the truth of our customers’ experience is what we need. And truth can’t be automated or graphed. It takes time, and it takes humans.
